F is for F* off to fake news

An EU co-funded project has developed tools to check the factuality of user-generated content, social media posts and online video. They’re already being used by newsrooms worldwide to fight back against fake news.

It’s the era of fake news, they say, where all information is suspect. But news organisations, software developers and international institutions are fighting back with a growing array of tools and techniques to help sort the facts from the falsehoods and the meaning from the manipulation.

The European Union’s Horizon 2020 Programme was the biggest EU Research and Innovation programme ever, making nearly €80 billion of funding available over seven years, from 2014 to 2020. In January 2016, the Horizon 2020-funded InVID project launched: a consortium of tech companies, broadcasters and institutions joined forces to develop a set of tools for video verification. The result is a suite of tools that includes a free browser plug-in to help everyone from journalists to amateur fact-finders verify online media.

“We had previously been involved in a project called Reveal,” says Jochen Spangenberg, Innovation Manager at German public international broadcaster Deutsche Welle, one of the partners in the InVID consortium. “We worked on algorithm-supported analysis and verification of digital content, as well as other legal issues about how to approach and use social media. One of the outcomes of that project was that algorithm-supported video verification is still quite a big challenge on its own.”

Spangenberg works in Deutsche Welle’s Research and Cooperations Project unit, which takes part in forward-looking technology projects for broadcast, especially around IP, and he has been involved in InVID since its inception.

Building on the knowledge gained in the Reveal project, the consortium, which includes the broadcaster Deutsche Welle, the news agency AFP, Spain’s University of Lleida, and several private companies and research organisations, began R&D on easy-to-use components that could help identify fake or misleading content. The first of these to be made publicly available was the InVID browser plug-in.

Plugging into the facts

The InVID plug-in is available for free as an extension to the Chrome and Firefox browsers. Users can paste URLs of videos or images into the toolkit, or upload local files, and get back a wealth of data about the material, its origins and its authenticity.

At its most basic, the InVID dashboard offers an analysis tool that returns the metadata attached to a video. For YouTube, it returns the video’s title, description, view count, upload time, thumbs up and down, number of comments and channel data, among other parameters.
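As an illustration of that metadata step, the Python sketch below flattens a video record into the fields listed above and uses the upload time for a first plausibility check. It is not InVID code: it assumes a record shaped like a YouTube Data API v3 `videos` resource, and the sample values, the `summarise_video` helper and the claimed event date are hypothetical.

```python
import json
from datetime import datetime, timezone

# Hypothetical record shaped like a YouTube Data API v3 "videos" resource
# (snippet + statistics parts). Counts are strings in that API's JSON.
SAMPLE = """
{
  "id": "abc123",
  "snippet": {
    "title": "Plane crash footage",
    "description": "Filmed yesterday",
    "publishedAt": "2017-05-04T09:30:00Z",
    "channelTitle": "Example Channel"
  },
  "statistics": {
    "viewCount": "120394",
    "likeCount": "812",
    "commentCount": "97"
  }
}
"""


def summarise_video(record: dict) -> dict:
    """Flatten the snippet/statistics parts into the fields a verifier checks first."""
    snippet = record.get("snippet", {})
    stats = record.get("statistics", {})

    def count(name):
        # Convert string counts to int; absent or hidden counts become None.
        value = stats.get(name)
        return int(value) if value is not None else None

    # Assumes the "YYYY-MM-DDTHH:MM:SSZ" form without fractional seconds.
    uploaded = datetime.strptime(
        snippet["publishedAt"], "%Y-%m-%dT%H:%M:%SZ"
    ).replace(tzinfo=timezone.utc)

    return {
        "title": snippet.get("title"),
        "description": snippet.get("description"),
        "channel": snippet.get("channelTitle"),
        "uploaded": uploaded,
        "views": count("viewCount"),
        "likes": count("likeCount"),
        "dislikes": count("dislikeCount"),
        "comments": count("commentCount"),
    }


summary = summarise_video(json.loads(SAMPLE))
print(summary["uploaded"].isoformat(), summary["views"])  # 2017-05-04T09:30:00+00:00 120394

# A clip uploaded before the event it claims to show cannot be footage of that event.
claimed_event = datetime(2019, 3, 1, tzinfo=timezone.utc)  # hypothetical event time
print(summary["uploaded"] < claimed_event)  # True
```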

InVID also automatically extracts keyframes from every shot of a video and searches for matching thumbnails that might be available elsewhere on the web. It also allows very time-specific searches of Twitter posts, which can help determine exactly when the video might have been tweeted before.

Posting old or out-of-context videos for misinformation purposes, or even as clickbait, has become increasingly common internationally around major news events and socially charged issues. Being able to identify their origin quickly helps to clear the way for actual reporting.

“If you find that components or keyframes of a video have appeared two weeks ago, or two years ago, then it’s fairly likely that this is not original content,” says DW’s Spangenberg. “If it claims to be from a news event that’s happened yesterday – a plane crash, say – but you can find that video with the same shots elsewhere, it’s unlikely the video really is from yesterday, and they’ve taken an old one and tried to fool the world.”
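Spotting a keyframe that has “appeared two weeks ago, or two years ago” calls for near-duplicate matching, because re-posted footage is usually re-encoded, resized or colour-shifted. A minimal sketch of one common technique, an average hash compared by Hamming distance, is shown below in Python. It is a swapped-in illustration rather than the method InVID or any search engine uses, and the tiny 4×4 frames and the distance threshold are assumptions made to keep the example readable.

```python
def average_hash(pixels):
    """Return an integer hash with one bit per pixel, set when the pixel is brighter than the mean.

    `pixels` is a small grayscale grid (rows of 0-255 ints), assumed to be already
    downscaled; real implementations typically shrink frames to 8x8 first.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a, b):
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def is_near_duplicate(frame_a, frame_b, max_distance=2):
    # max_distance is a hypothetical threshold, chosen here for 16-bit hashes.
    return hamming(average_hash(frame_a), average_hash(frame_b)) <= max_distance


original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [100, 110, 120, 130],
    [30, 40, 50, 60],
]
# The same frame after a uniform brightness boost, as a filter or re-encode might apply.
brightened = [[min(255, p + 30) for p in row] for row in original]
# An unrelated frame with a different arrangement of light and dark areas.
unrelated = [
    [200, 210, 10, 20],
    [205, 215, 15, 25],
    [30, 40, 50, 60],
    [100, 110, 120, 130],
]

print(is_near_duplicate(original, brightened))  # True
print(is_near_duplicate(original, unrelated))   # False
```

Because each bit only records whether a pixel is brighter than the frame’s own average, a uniform brightness change leaves the hash untouched, which is why this family of hashes tolerates the light edits typical of re-uploads.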

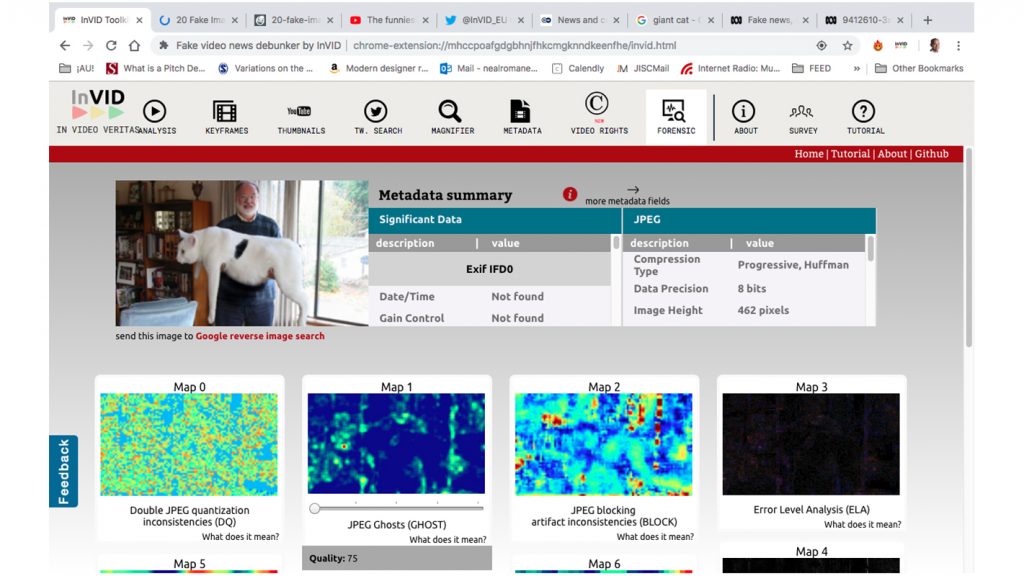

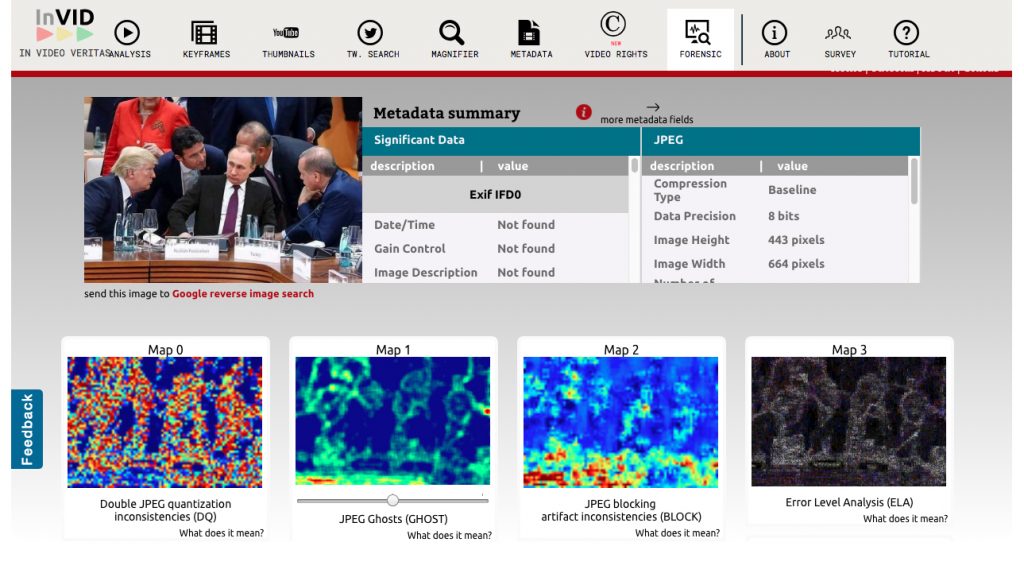

InVID offers some impressive image and video keyframe analysis tools as well. The simplest is a basic image inspection enabling users to magnify, sharpen, flip or apply bicubic interpolation to an image. At the other end is InVID’s Image Verification Assistant, a forensic analysis tool, particularly valuable in detecting fabrication or manipulation of material. When given an image to verify, the tool offers multiple types of forensic feedback, including detection of JPEG ghosts, double JPEG quantisation and JPEG blocking artefact inconsistencies, high frequency noise, and CAGI analysis. These tools can be highly effective in detecting manipulation, editing, and extra compositing in images or video keyframes.
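The principle behind JPEG ghost detection can be shown without an image library. The sketch below assumes a set of DCT coefficients that were quantised once with an unknown step size; quantising them again with each candidate step and measuring the error gives a curve whose minimum, the “ghost”, sits at the original step. This is a deliberately simplified, one-dimensional version of the idea: forensic tools instead recompress a whole image at a range of JPEG qualities and compare the differences region by region, so a pasted-in area that was compressed at another quality shows its ghost at a different point. The coefficients and the step range are hypothetical.

```python
def requantise(coeff, step):
    """Quantise and then dequantise one coefficient with the given step size."""
    return round(coeff / step) * step


def ghost_curve(coeffs, steps):
    """Mean squared error between the coefficients and their requantised versions, per step."""
    return {
        step: sum((c - requantise(c, step)) ** 2 for c in coeffs) / len(coeffs)
        for step in steps
    }


def estimate_original_step(coeffs, steps):
    """Return the candidate step with the lowest requantisation error (the 'ghost')."""
    curve = ghost_curve(coeffs, steps)
    return min(curve, key=curve.get)


# Hypothetical coefficients that were quantised once with step 7.
ORIGINAL_STEP = 7
multiples = [-5, -3, -2, -1, 0, 1, 1, 2, 3, 4, 6, 9]
coeffs = [k * ORIGINAL_STEP for k in multiples]

# Step 1 is skipped: it trivially preserves any integer coefficients.
candidates = range(2, 16)
print(estimate_original_step(coeffs, candidates))  # 7
```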

The InVID consortium has also been working on tools for automatic logo detection, useful for helping journalists locate the producers of a video or image, whether it was branded by a news agency or a terrorist group. And the InVID plug-in features a new rights and copyright status checker, still in the development stages.

Media partners

At last count, the InVID plug-in had 5,900 users, having been adopted quickly and widely by the journalistic and verification communities. The only comparable tool, says Spangenberg, is Amnesty International’s YouTube DataViewer, which extracts video metadata and keyframes and lets users run a Google image search on the keyframes.

The InVID project is coordinated by Dr. Vasileios Mezaris of the Greek research organisation CERTH (Centre for Research and Technology Hellas), with CERTH researchers developing some of the individual components of the InVID toolkit. The InVID developers are also working with partners to develop wider applications for the tools. Consortium partner Condat AG, based in Berlin, is creating a verification plug-in for the Annova Open Media newsroom content management system; Condat is an Annova reseller.

The commercialisation of the InVID tools is subject to negotiations among the consortium.

“If the project partners are doing something that they then want to sell on the market, there has to be some agreement on how it is marketed and how revenues are shared,” notes Spangenberg. “For example, because it is a cooperative project, if Condat wants to market and sell its add-on, there has to be an agreement under which it can be used – a licence agreement or revenue sharing model.”

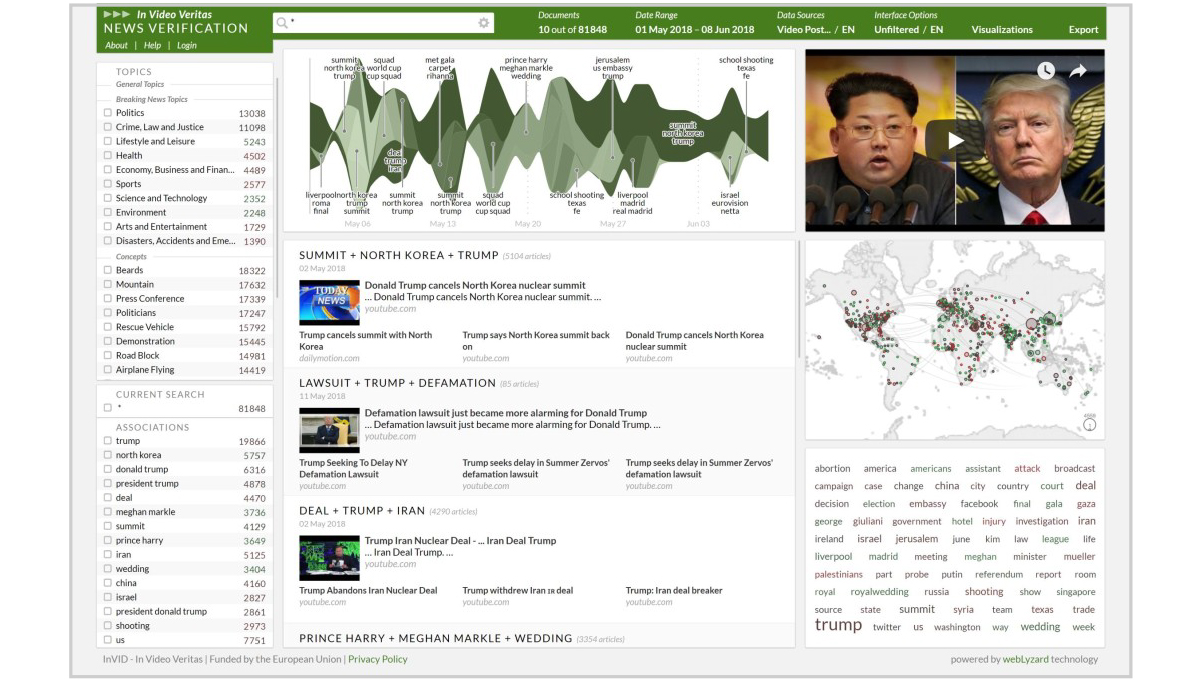

Vienna-based consortium partner webLyzard is also helping to develop a dashboard, built on the InVID platform, for social media analysis of trending topics.

Deutsche Welle and the news agency AFP provide feedback and direction on the journalistic applications of the research.

“Our role in this at Deutsche Welle is fairly small in terms of the development itself,” says Spangenberg. “What we have done is formulate the requirements from a media and news organisation perspective. When a new version of the tools comes out, we give it to journalists and ask them to test it, play around with it and give feedback. In total, we will have had nine of these cycles of development, validation, and redevelopment.”

Dangerous fakes

The toolkit has long since left the lab and is being put to practical use across the news and verification sectors – and none too soon, some would argue, as the digital information that we’re continually exposed to becomes increasingly unreliable.

“It’s an arms race, in a way,” says Spangenberg. “One of the prime examples is the Obama video of a completely faked speech, voiced by the actor Jordan Peele. The first time I saw it, I thought, ‘That’s weird language Obama’s using’ and then, toward the end, you see Jordan Peele on the other half of the screen. But if you don’t know what’s possible, then I think people can very easily be fooled.

“They did some work on this deep fakes technology at the Max Planck Institutes with mapping of faces, and that was in 2016, almost three years ago. I think it’s only going to become more sophisticated when it comes to media manipulation. Some people could just be doing it for research, but others are doing things with bodies and faces merged with other content to make people appear to do things that they’ve never done. That can go viral very quickly, and can be very dangerous in terms of reputation and many other things.”

So how do we make good decisions in a world where you can no longer believe your eyes? Spangenberg thinks the most important first step is media literacy, including developing critical thinking for children in early education.

“We also need to teach them how to use technology – not using their smartphones, because that’s all kids do – but what’s underlying the technology, how it works, what it can lead to and what people use it for – for good, for bad, for ugly.

“We should also train them to use tools for verification. I’m sometimes stunned by how few people know how to use basic internet techniques like a reverse image search, where you can easily use Google to see if and where a similar image is available elsewhere on the internet. A lot of people don’t know how to do this so, instead, they just share images that look real and suit their world view.

“Very few journalists are really experts when it comes to knowing their tools for verifying digital content. It’s definitely not mainstream yet. People have to learn it. We’re not at that point where every journalist, no matter what age, knows exactly what they have to do to verify any item you give them.”

The term “mainstream media” has become a blanket slur against a whole industry which some feel has lost touch with the facts of people’s lives. As local news sources dry up and news media is centralised in national urban centres, people start to find news sources that speak to them more personally and directly – and not all of these may have their best interests at heart.

Spangenberg believes that a proven dedication to the truth may be one remedy. “This is all about trying to find out who, what, where, when, why. We’re having this trust crisis. These are difficult times for established media. Some are turning away, some are turning against it, but there are some in the middle still and you have to try to win some of them back. How do you do it? You have to show that you’re doing your best and you’re trying to get to the bottom of things, to get to the truth of the matter.”