Something New at the Royal Wedding

Posted on Feb 12, 2019 by FEED Staff

Sponsored editorial

Audiences love a love story, especially one that ends in a wedding. In May 2018, one young couple's nuptials became the UK's biggest media event. Interest in the wedding of Prince Harry and Meghan Markle extended well beyond the UK: almost 40 million Americans watched the coverage. TV viewing figures for the 2011 wedding of Harry's older brother, William, to Kate Middleton were even larger. A royal wedding is guaranteed to bring in viewers.

Unlike a sporting event, whose broadcast rights may be licensed territory by territory, a public spectacle like the 2018 royal wedding lets multiple broadcasters compete for a slice of the viewership pie. That competition forces each broadcaster to up the ante on quality and innovation.

Sky has always tried to push the envelope in TV technology. The company was an early pioneer of 3DTV, and although the format never caught on with viewers, Sky's research in production and distribution had a ripple effect throughout the industry. It is now exploring HDR content, expanding its 4K offerings and has announced plans for a new Innovation Centre at its campus in Osterley, London, which will be the home of its new Get into Tech for Young Women programme.

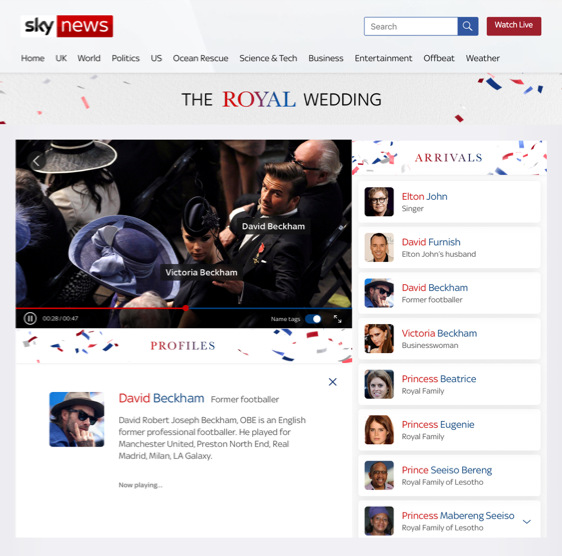

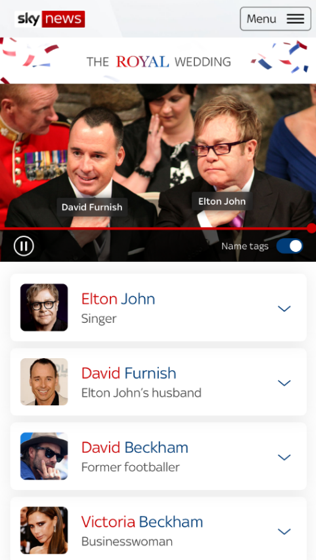

For the marriage of Harry and Meghan, Sky News used artificial intelligence to build a live-streaming app that let viewers identify wedding guests and participants in real time.

Who’s Who?

The Sky News Royal Wedding: Who's Who Live application was powered by machine-learning-enhanced video. The live stream gave users an insider's view of guest arrivals, with machine-learning infrastructure detecting guests in real time as they appeared on screen. The app delivered a steady stream of facts and insights about each arriving guest, including their connections to the royal couple. Half a million people in more than 200 countries used it on mobile devices and in web browsers.
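The article doesn't describe how detections were turned into on-screen facts. Below is a minimal sketch of one plausible approach, assuming a hypothetical `Detection` record for each recognised face and a hypothetical `guest_facts` lookup table: show a fact card when a known guest appears, ignore low-confidence matches and suppress repeat cards while the guest stays in shot. The names, threshold and cooldown are illustrative assumptions, not Sky's implementation.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, Tuple


@dataclass(frozen=True)
class Detection:
    """One face match for a single video frame (hypothetical record shape)."""
    timestamp_s: float  # position in the live feed, in seconds
    name: str           # identified guest
    confidence: float   # match confidence, 0-100


def fact_cards(
    detections: Iterable[Detection],
    guest_facts: Dict[str, str],
    min_confidence: float = 90.0,
    cooldown_s: float = 60.0,
) -> Iterator[Tuple[float, str, str]]:
    """Yield (timestamp, name, fact) when a known guest comes on screen.

    A guest triggers a new card only if they have not been seen (with
    sufficient confidence) during the previous `cooldown_s` seconds.
    """
    last_seen: Dict[str, float] = {}
    for d in detections:
        # Skip weak matches and faces we have no facts for.
        if d.confidence < min_confidence or d.name not in guest_facts:
            continue
        previous = last_seen.get(d.name)
        last_seen[d.name] = d.timestamp_s
        # Guest is still (or recently) in shot: no repeat card.
        if previous is not None and d.timestamp_s - previous < cooldown_s:
            continue
        yield d.timestamp_s, d.name, guest_facts[d.name]


# Placeholder guests and facts, for illustration only.
facts = {"Guest A": "Friend of the groom", "Guest B": "Cousin of the bride"}
feed = [
    Detection(0.0, "Guest A", 97.5),
    Detection(0.5, "Guest A", 98.1),  # still in shot: no new card
    Detection(1.0, "Guest B", 72.0),  # below threshold: ignored
    Detection(2.0, "Guest B", 95.0),
]
for card in fact_cards(feed, facts):
    print(card)
# prints (0.0, 'Guest A', 'Friend of the groom')
# then   (2.0, 'Guest B', 'Cousin of the bride')
```

Using a "last seen" time rather than a "last shown" time means a guest who lingers on camera never triggers a duplicate card, while one who leaves and returns much later does.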


A video-on-demand version was published after the wedding. It allowed users to navigate the roster of guests with integrated pause, rewind and restart.
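Navigating the guest roster in an on-demand recording could rest on a simple index. This sketch assumes a hypothetical list of `(timestamp, name)` appearances recorded during the live event; `build_roster` and `next_appearance` are illustrative names, not part of any Sky or AWS API.

```python
import bisect
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def build_roster(appearances: List[Tuple[float, str]]) -> Dict[str, List[float]]:
    """Map each guest name to the sorted times (in seconds) they appear."""
    roster: Dict[str, List[float]] = defaultdict(list)
    for t, name in appearances:
        roster[name].append(t)
    for times in roster.values():
        times.sort()
    return dict(roster)


def next_appearance(
    roster: Dict[str, List[float]], name: str, position_s: float
) -> Optional[float]:
    """Return the first time at or after `position_s` when `name` is on screen."""
    times = roster.get(name, [])
    i = bisect.bisect_left(times, position_s)  # binary search in sorted times
    return times[i] if i < len(times) else None


roster = build_roster([(12.0, "Guest A"), (340.0, "Guest B"), (95.0, "Guest A")])
print(sorted(roster))                           # ['Guest A', 'Guest B']
print(next_appearance(roster, "Guest A", 0.0))  # 12.0
print(next_appearance(roster, "Guest A", 20.0)) # 95.0
print(next_appearance(roster, "Guest B", 400.0))  # None
```

A player could seek to the returned time when a viewer taps a guest's name; restart is simply a seek to zero.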

Sky News designed and built the app's machine-learning functionality in only three months. The team built the service on cloud infrastructure, both for speed of development and to ensure access to the scalable machine-learning capabilities behind the app's core feature: real-time guest identification.

The project combined cloud video infrastructure from AWS with GrayMeta's data analysis platform and UI Centric's interactive UI design. Amazon Rekognition, AWS's video and image analysis service, handled the real-time identification of celebrities and tagged them with related metadata, which fed a user experience developed in collaboration with UI Centric.

Rekognition lets developers add image and video analysis to applications by submitting images or video to the Rekognition API. The service can identify objects, people, text, scenes and activities, and can detect inappropriate content. The cloud-based tool also provides facial analysis and face recognition.
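As a hedged illustration of such an API call, the sketch below sends a single frame to Rekognition's celebrity recognition operation through boto3's `recognize_celebrities`, whose response includes a `CelebrityFaces` list with `Name` and `MatchConfidence` fields. The article doesn't say how Sky News submitted footage; grabbing still frames from the feed, the 90% threshold and the function name `identify_celebrities` are assumptions. The client is passed in so the example runs without AWS credentials.

```python
from typing import Any, Dict, List, Tuple


def identify_celebrities(
    client: Any, frame_jpeg: bytes, min_confidence: float = 90.0
) -> List[Tuple[str, float]]:
    """Send one video frame to Rekognition; return (name, confidence) pairs.

    `client` is expected to be a boto3 Rekognition client, for example
    boto3.client("rekognition") with credentials and a region configured.
    """
    response: Dict[str, Any] = client.recognize_celebrities(
        Image={"Bytes": frame_jpeg}
    )
    matches = [
        (face["Name"], face["MatchConfidence"])
        for face in response.get("CelebrityFaces", [])
        if face["MatchConfidence"] >= min_confidence
    ]
    # Most confident match first.
    return sorted(matches, key=lambda m: m[1], reverse=True)


class StubClient:
    """Stands in for the boto3 client and returns a canned response."""

    def recognize_celebrities(self, Image: Dict[str, bytes]) -> Dict[str, Any]:
        return {
            "CelebrityFaces": [
                {"Name": "Guest A", "Id": "1", "MatchConfidence": 99.2},
                {"Name": "Guest B", "Id": "2", "MatchConfidence": 61.0},
            ],
            "UnrecognizedFaces": [],
        }


print(identify_celebrities(StubClient(), b"fake-jpeg-bytes"))  # [('Guest A', 99.2)]
```

Against a real account, replacing `StubClient()` with a boto3 Rekognition client and passing genuine JPEG bytes sends the same request to the service.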


Capturing the Guests

A video feed from an outside broadcast van near St George's Chapel at Windsor Castle captured the faces of arriving guests. The raw signal went to a nearby AWS Elemental Live small form-factor appliance, which began the real-time ingest into the cloud-based workflow. The AWS Elemental MediaLive service handled video processing, and AWS Elemental MediaPackage applied digital rights management for secure distribution over the Amazon CloudFront content delivery network. Sky News also used MediaPackage for live-to-VOD applications such as catch-up TV.

Amazon CloudFront served as the single delivery path for the content, speeding distribution to viewers. The enhanced video and metadata were delivered to a front-end application and video player that UI Centric designed and developed specifically for this app.

Royal ROI

Information-rich content like that in Sky's Who's Who Live app will become increasingly common, even essential, for providers and broadcasters looking to capture more eyeballs. It's not hard to imagine a service or app that tells you not only who is present at an event, but whose designs they're wearing, what vehicles they're driving and what the prominent buildings and landmarks in a scene are, along with links to more information or options to add items to your wish list or shopping cart.

Sky is looking ahead to other types of events where machine learning can deliver improved ROI. With live events a key differentiator for broadcasters, and sophisticated new tools now widely available, we can look forward to increasingly dynamic video innovations accompanying big live events.
