A Video Compression Primer

Broadcasters, pay TV operators and OTT services now need to deliver video to every type of device, from large HD and 4K televisions to mobile phones and tablets. The catch: audiences expect video to look just as good on the smallest device as it does on the largest one.

Many of today’s TV shows are shot at the same quality as feature films, which can translate into 5 TB or more of source data per hour. Files that large are far too big for even the fastest Internet connection to deliver consistently, and most consumer devices aren’t designed to play back these source formats.
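To put that figure in perspective, the arithmetic below converts 5 TB per hour into the sustained network bitrate needed to stream it. It assumes decimal units (1 TB = 10^12 bytes, 1 Gbps = 10^9 bits per second), and `tb_per_hour_to_gbps` is a hypothetical helper written for this illustration. The result is roughly 11 Gbps, about eleven times what a Gigabit Ethernet link can carry.

```python
# Convert a storage rate (terabytes per hour) into a network bitrate.
# Assumes decimal units: 1 TB = 10**12 bytes, 1 Gbps = 10**9 bits/s.

def tb_per_hour_to_gbps(tb_per_hour: float) -> float:
    """Return the sustained bitrate in Gbps needed to move tb_per_hour."""
    bits_per_hour = tb_per_hour * 10**12 * 8
    bits_per_second = bits_per_hour / 3600
    return bits_per_second / 10**9

source_gbps = tb_per_hour_to_gbps(5)
print(f"5 TB/hour is about {source_gbps:.1f} Gbps")  # 5 TB/hour is about 11.1 Gbps
print(f"That is about {source_gbps:.0f}x a 1 Gbps link")  # That is about 11x a 1 Gbps link
```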

So how do you stream premium content to viewers on smart TVs, mobile phones, or tablets – whether those devices are connected to Gigabit Ethernet, a cellular network, or any connection speed in between the two?

The answer: compress the video into smaller files, in formats that consumer devices can play. The challenge: maintaining high-quality playback on every possible type of screen.

Codecs are the key to addressing size, format and playback requirements. A codec allows you to take video input and create a compressed digital bit stream (encode) that is then played back (decoded) on the destination device.

There are two types of codecs: lossless and lossy. Lossless compression reduces file size while preserving the original, uncompressed video exactly, so decoding reproduces every pixel. Digital cinema files are generally encoded in a lossless or visually lossless format. The video files that you watch on your smart TVs and personal devices are all encoded with a lossy codec, which discards as much visual information as possible while still keeping the image looking great. All streaming video is encoded using lossy codecs.
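The difference between the two approaches can be shown on a row of synthetic 8-bit pixel values. In this sketch, zlib stands in for a lossless compressor and coarse quantisation stands in for the lossy step; neither is a video codec, and `lossless_roundtrip` and `quantize` are hypothetical helpers. The lossless round trip returns identical bytes, while quantisation permanently discards detail (here, up to 8 levels per sample) in exchange for data that is more repetitive and therefore easier to compress.

```python
import random
import zlib

def lossless_roundtrip(data: bytes) -> bytes:
    """Compress and decompress with zlib; the output is bit-for-bit identical."""
    return zlib.decompress(zlib.compress(data, 9))

def quantize(data: bytes, step: int = 16) -> bytes:
    """Lossy step: snap each 8-bit sample to the centre of its bucket.

    Detail inside each bucket is thrown away and cannot be recovered.
    """
    return bytes(min(255, (b // step) * step + step // 2) for b in data)

# Synthetic row of 8-bit "pixels": a smooth ramp plus a little noise.
random.seed(0)
pixels = bytes(min(255, max(0, i // 4 + random.randint(-3, 3))) for i in range(1024))

restored = lossless_roundtrip(pixels)
approx = quantize(pixels)
print("lossless identical:", restored == pixels)  # lossless identical: True
print("lossy max error:", max(abs(a - b) for a, b in zip(pixels, approx)))
print("zlib size, original :", len(zlib.compress(pixels, 9)))
print("zlib size, quantised:", len(zlib.compress(approx, 9)))
```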

There are many different video codecs available, including proprietary ones: algorithms developed by a single company, whose output can only be played back on that company’s devices or on devices that license its technology. More common are open-source or standards-based codecs, which are typically less expensive to use and don’t lock content owners into a closed system.

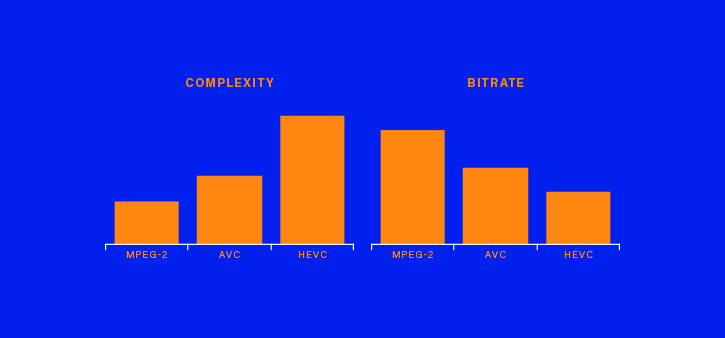

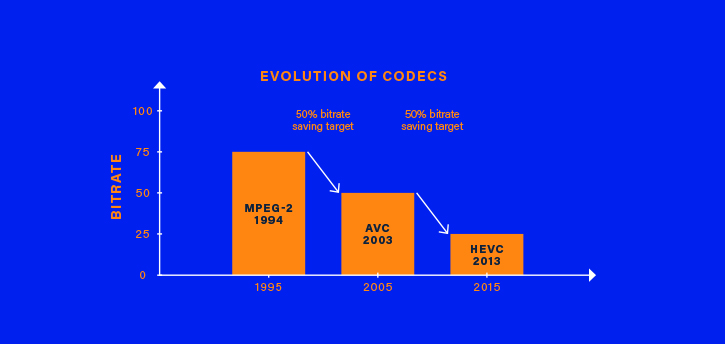

Today’s most popular standards-based codecs for video streaming are AVC/H.264 and HEVC/H.265. Broadcasters and pay TV operators sometimes still use MPEG-2, which is more than 20 years old. The crucial goal in codec development is to achieve lower bitrates and smaller file sizes while maintaining similar quality to the source.

Until fairly recently, most video encoding was performed using specially built hardware chips. AWS Elemental pioneered software-based encoding, which runs on off-the-shelf hardware.

How video compression works

To reduce video size, encoders implement different types of compression schemes. Intra-frame compression algorithms reduce the file size of key frames (also called intra-coded frames or I-frames) using techniques similar to standard digital image compression. Inter-frame compression schemes then process the frames between key frames, encoding each one relative to other frames rather than on its own.
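A toy sketch of the inter-frame idea: split each frame into fixed-size blocks and send only the blocks that differ from the previous frame, letting the decoder copy the rest. This is conditional replenishment, a much simpler relative of what standards-based codecs do; real P-frames use motion-compensated prediction and transform-code the remaining residual. The functions `changed_blocks` and `apply_blocks` and the 4×4 block size are hypothetical choices for illustration.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]  # rows of 8-bit luma samples

def changed_blocks(prev: Frame, curr: Frame, block: int = 4,
                   threshold: int = 0) -> Dict[Tuple[int, int], Frame]:
    """Return only the blocks of curr that differ from prev.

    Keys are the (row, col) of each block's top-left corner; values are the
    new block contents. Unchanged blocks are omitted entirely.
    """
    out: Dict[Tuple[int, int], Frame] = {}
    for y in range(0, len(curr), block):
        for x in range(0, len(curr[0]), block):
            cur_blk = [row[x:x + block] for row in curr[y:y + block]]
            prev_blk = [row[x:x + block] for row in prev[y:y + block]]
            # Sum of absolute differences between co-located blocks.
            sad = sum(abs(a - b) for r1, r2 in zip(cur_blk, prev_blk)
                      for a, b in zip(r1, r2))
            if sad > threshold:
                out[(y, x)] = cur_blk
    return out

def apply_blocks(prev: Frame, blocks: Dict[Tuple[int, int], Frame]) -> Frame:
    """Decoder side: start from the previous frame and patch in changed blocks."""
    frame = [row[:] for row in prev]
    for (y, x), blk in blocks.items():
        for dy, row in enumerate(blk):
            frame[y + dy][x:x + len(row)] = row
    return frame

prev = [[0] * 8 for _ in range(8)]
curr = [row[:] for row in prev]
curr[5][6] = 200  # a single pixel changes
blocks = changed_blocks(prev, curr)
print(sorted(blocks))  # [(4, 4)]: one of four blocks needs sending
print(apply_blocks(prev, blocks) == curr)  # True
```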

For standards-based codecs, AWS Elemental builds and maintains its own algorithms to analyse I-frames and the differences between them and subsequent frames. Predicted frames (P-frames) store only the changes from an earlier frame, so the encoder processes only the parts of the image that change. Bi-directional predicted frames (B-frames) can reference both earlier and later frames and store the differences.
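Because a B-frame can reference a later frame, that later frame has to be decoded first, so frames are transmitted in a different order from the one in which they are displayed. The sketch below converts a display-order pattern into decode order. It assumes a simple structure in which each B-frame references only the nearest I- or P-frame on either side (no hierarchical B-frames), and `decode_order` is a hypothetical helper.

```python
from typing import List

def decode_order(gop: str) -> List[int]:
    """Map a display-order frame pattern such as "IBBPBBP" to decode order.

    B-frames are held back until the next I- or P-frame (their forward
    reference) has been emitted.
    """
    order: List[int] = []
    pending_b: List[int] = []
    for idx, kind in enumerate(gop):
        if kind == "B":
            pending_b.append(idx)
        elif kind in ("I", "P"):
            order.append(idx)         # reference frame first
            order.extend(pending_b)   # then the B-frames that depend on it
            pending_b.clear()
        else:
            raise ValueError(f"unknown frame type {kind!r}")
    if pending_b:
        raise ValueError("trailing B-frames need a following I- or P-frame")
    return order

print(decode_order("IBBPBBP"))  # [0, 3, 1, 2, 6, 4, 5]
```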

Once compression has been applied to the pixels in all the frames of a video, the next parameter in video delivery is the bitrate, or data rate: the amount of compressed data the stream carries per unit of time, usually measured in kilobits or megabits per second (Kbps or Mbps).

Generally speaking, the higher the bitrate, the higher the video quality, but also the more storage required to hold the file and the greater the bandwidth required to deliver the stream.
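The link between bitrate, duration and storage is simple multiplication. A sketch using a hypothetical `file_size_gb` helper with decimal units; the 5 Mbps figure is only an illustrative value, and the calculation counts video bits alone, ignoring audio tracks and container overhead.

```python
def file_size_gb(bitrate_mbps: float, duration_s: float) -> float:
    """Approximate size in gigabytes (10**9 bytes) at a given average bitrate."""
    bits = bitrate_mbps * 10**6 * duration_s
    return bits / 8 / 10**9

# A two-hour film at an average of 5 Mbps:
print(f"{file_size_gb(5, 2 * 3600):.1f} GB")  # 4.5 GB
```

Doubling the bitrate doubles both the file size and the bandwidth needed to stream it in real time.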

Live and VOD encoding

AWS Elemental compression products fall into two categories: live encoders and video-on-demand (VOD) encoders. Live encoders take in a live video signal and encode it for immediate delivery, for example for a live concert or sporting event, or for traditional linear broadcast channels.

While live encoders are limited to analysing and encoding content in real time, VOD encoders that use file-based media sources are not restricted to processing the content in real time. VOD encoders may take multiple passes at the video, analysing it for motion and complexity. For example, explosions in an action movie might require more effort to encode than a talking-head interview.
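The real-time constraint can be made concrete with simple arithmetic: at a given frame rate, a live encoder must on average finish each frame within 1/fps seconds, or it falls behind the incoming signal. A VOD encoder has no such deadline, which is what leaves room for extra analysis passes. A minimal sketch with hypothetical helper names; it ignores the pipelining and lookahead buffering that real live encoders use, which add latency but don’t change the average throughput requirement.

```python
def frame_budget_ms(fps: float) -> float:
    """Average encode time available per frame for a real-time (live) encoder."""
    return 1000.0 / fps

def keeps_up(avg_encode_ms: float, fps: float) -> bool:
    """True if the encoder's average time per frame fits the real-time budget."""
    return avg_encode_ms <= frame_budget_ms(fps)

print(f"{frame_budget_ms(60):.1f} ms per frame at 60 fps")  # 16.7 ms per frame at 60 fps
print(keeps_up(20.0, 60))  # False: too slow for live, acceptable for file-based VOD
```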

Constant bitrate (CBR) encoding keeps the video at a more or less consistent bitrate for the duration of a live stream or an entire video file. Some variation still occurs, because different frame types have different data rates and because client buffers tolerate a small amount of bitrate variability during playback. Variable bitrate (VBR) encoding, by contrast, lets the bitrate vary greatly over the video’s duration.

A VBR encode spreads the bits around, using more bits to encode complex parts of content (like action or high-motion scenes) and fewer bits for simpler, more static shots. In both CBR and VBR encoding, the encoder follows the average bitrate target as closely as possible, so at the same target the two produce files of very similar size.
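A simplified illustration of the difference: CBR gives every segment the same bitrate, while VBR shares the same total budget in proportion to how complex each segment is. This sketch assumes equal-length segments and a complexity score per segment from an earlier analysis pass, as a multi-pass VOD encoder might produce; the scores and helper names are hypothetical. Real rate control works at the frame and block level against a buffer model, not with a single proportional split.

```python
from typing import List

def cbr_allocation(n_segments: int, avg_kbps: float) -> List[float]:
    """CBR: every segment gets the target bitrate (real CBR allows small fluctuations)."""
    return [avg_kbps] * n_segments

def vbr_allocation(complexity: List[float], avg_kbps: float) -> List[float]:
    """VBR: the same total budget, shared in proportion to segment complexity."""
    budget = avg_kbps * len(complexity)
    total = sum(complexity)
    return [budget * c / total for c in complexity]

# Hypothetical complexity scores from an analysis pass:
# talking head, explosion, static title card, crowd scene.
complexity = [1.0, 4.0, 0.5, 2.5]
cbr = cbr_allocation(len(complexity), 3000.0)
vbr = vbr_allocation(complexity, 3000.0)
print(cbr)  # [3000.0, 3000.0, 3000.0, 3000.0]
print(vbr)  # [1500.0, 6000.0, 750.0, 3750.0]
print(sum(cbr) == sum(vbr))  # True: same total bits, so the same file size
```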

Overall, especially at lower average bitrates, VBR-encoded video generally looks better than CBR-encoded video at the same average bitrate. One caveat with VBR: the encoder must be configured so that the peak data rate isn’t set so high that the viewing device can’t process the stream or file.
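One way to picture a peak limit: clamp any segment above the peak and hand the excess bits to segments that still have headroom, so the total (and file size) stays the same. This is a hypothetical `cap_peaks` helper operating on per-segment bitrates, not how any particular encoder works; real encoders enforce peaks through a decoder buffer model such as the MPEG-2 VBV or the H.264/HEVC hypothetical reference decoder (HRD).

```python
from typing import List

def cap_peaks(alloc: List[float], peak_kbps: float) -> List[float]:
    """Clamp every segment to peak_kbps and give the excess bits to segments
    that still have headroom, in proportion to their current bitrate.

    The total is preserved provided the average does not exceed the peak.
    """
    if sum(alloc) > peak_kbps * len(alloc):
        raise ValueError("average bitrate exceeds the peak")
    result = list(alloc)
    while True:
        excess = sum(max(0.0, b - peak_kbps) for b in result)
        if excess <= 1e-9:
            return result
        result = [min(b, peak_kbps) for b in result]
        room = [i for i, b in enumerate(result) if b < peak_kbps]
        weight = sum(result[i] for i in room)
        for i in room:
            if weight > 0:
                result[i] += excess * result[i] / weight
            else:  # uncapped segments are all zero: split the excess equally
                result[i] += excess / len(room)

vbr = [1500.0, 6000.0, 750.0, 3750.0]  # kbps per segment, from a VBR allocation
capped = cap_peaks(vbr, peak_kbps=4500.0)
print(capped)  # [2000.0, 4500.0, 1000.0, 4500.0]
```

Redistributing can push a segment that was under the peak over it, which is why the function loops until no excess remains.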

Quality-defined variable bitrate encoding (QVBR), a new encoding mode from AWS Elemental, takes VBR one step further. For live or VOD content, QVBR uses sophisticated algorithms to consistently deliver high image quality at the lowest bitrate each video requires. Simply set a quality target and a maximum bitrate, and the algorithm determines the optimal results for any type of source content (high action, talking heads and everything in between).
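The article does not describe how QVBR works internally, so the sketch below is not QVBR; it only illustrates the general idea of quality-targeted rate control. For each segment it picks the lowest candidate bitrate whose predicted quality meets the target, never exceeding the maximum. The quality model `toy_quality`, its 0 to 10 scale, the candidate bitrates and the complexity scores are all invented for illustration; a real encoder would estimate quality from the content itself.

```python
from typing import Callable, List, Sequence

def pick_bitrates(complexities: Sequence[float],
                  quality_of: Callable[[float, float], float],
                  quality_target: float,
                  max_kbps: float,
                  candidates: Sequence[float]) -> List[float]:
    """For each segment, choose the lowest candidate bitrate that meets the
    quality target, never exceeding max_kbps."""
    usable = sorted(b for b in candidates if b <= max_kbps)
    chosen = []
    for c in complexities:
        pick = usable[-1]  # fall back to the cap if the target is unreachable
        for b in usable:
            if quality_of(b, c) >= quality_target:
                pick = b
                break
        chosen.append(pick)
    return chosen

def toy_quality(kbps: float, complexity: float) -> float:
    """Hypothetical model: quality rises towards 10 as bits per unit of complexity grow."""
    return 10.0 * kbps / (kbps + 500.0 * complexity)

ladder = [500, 1000, 2000, 3000, 4500, 6000, 8000]
print(pick_bitrates([0.5, 1.0, 2.5, 4.0], toy_quality, 8.0, 6000, ladder))
# [1000, 2000, 6000, 6000]: simple scenes stay cheap, the hardest is capped
```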

Optimising quality

For premium content such as a television series or movie, AWS Elemental Server on-premises or AWS Elemental MediaConvert in the AWS Cloud encodes video files at the highest quality. On-demand services may run a fleet of AWS Elemental Servers, or simply use the auto-scaling MediaConvert service to process VOD assets.

The codec of choice for delivering video changes every few years, and each advance brings improved video quality at lower bitrates. At the same time, screen sizes and resolutions keep growing, so generating compressed files with high video quality takes considerable computing power.

Until recently, H.264 (also known as AVC) was the best codec for optimising quality and reducing file sizes, and many content publishers still use it today. But it’s quickly giving way to HEVC (High Efficiency Video Coding), also referred to as H.265. Different standards groups use different designations for the same standard: ITU-T calls it H.265, while ISO/IEC MPEG calls it HEVC.

HEVC requires more computing power than H.264, but it’s more efficient. Because AWS Elemental writes its encoding software from scratch rather than relying on off-the-shelf encoding software, it can take the efficiency gains in each new generation of codec and adjust its software to get the best quality, the smallest file sizes, or both, as well as the shortest encoding times.
