Vionlabs: Right on time

Posted on Apr 9, 2019 by FEED Staff

We sat down with the team at Vionlabs, a company using AI in remarkable ways to get the right content to the right viewer at the right time.

“Almost all the services we talk to now are looking at the content recommendation space, trying to understand which parts of it are strategically important and which parts of it they need to outsource,” says Patrick Danckwardt, head of global business development at Swedish content discovery company Vionlabs. “Most of them have tried the pure metadata approach and have found that it didn’t really move the needle. It doesn’t mean that the algorithms are bad, it just means that the metadata they’ve been using hasn’t been strong enough. When we talk to them, they say: ‘We’ve already tried that. It didn’t work.’ But once we can show them how we are really going in depth with data, that’s when we spark their interest.”

Stockholm-based Vionlabs is looking at the algorithms used for video recommendations in audaciously new ways. We are all familiar with film viewing recommendations, or suggestions for a product in a targeted ad that seem ridiculously misattuned: “When these digital platforms seem to know so much about me, how can they get my tastes so completely wrong?”

Much of the problem boils down to good old GIGO (garbage in, garbage out) and to what Vionlabs sees as an overly simplistic way of analysing content data and customer sentiment.

Pop the Bubble: By truly understanding the pulse of the viewer, it is possible to make different recommendations based on the time of day and their emotional state, rather than relying on keywords only.

Getting Recommendations Right

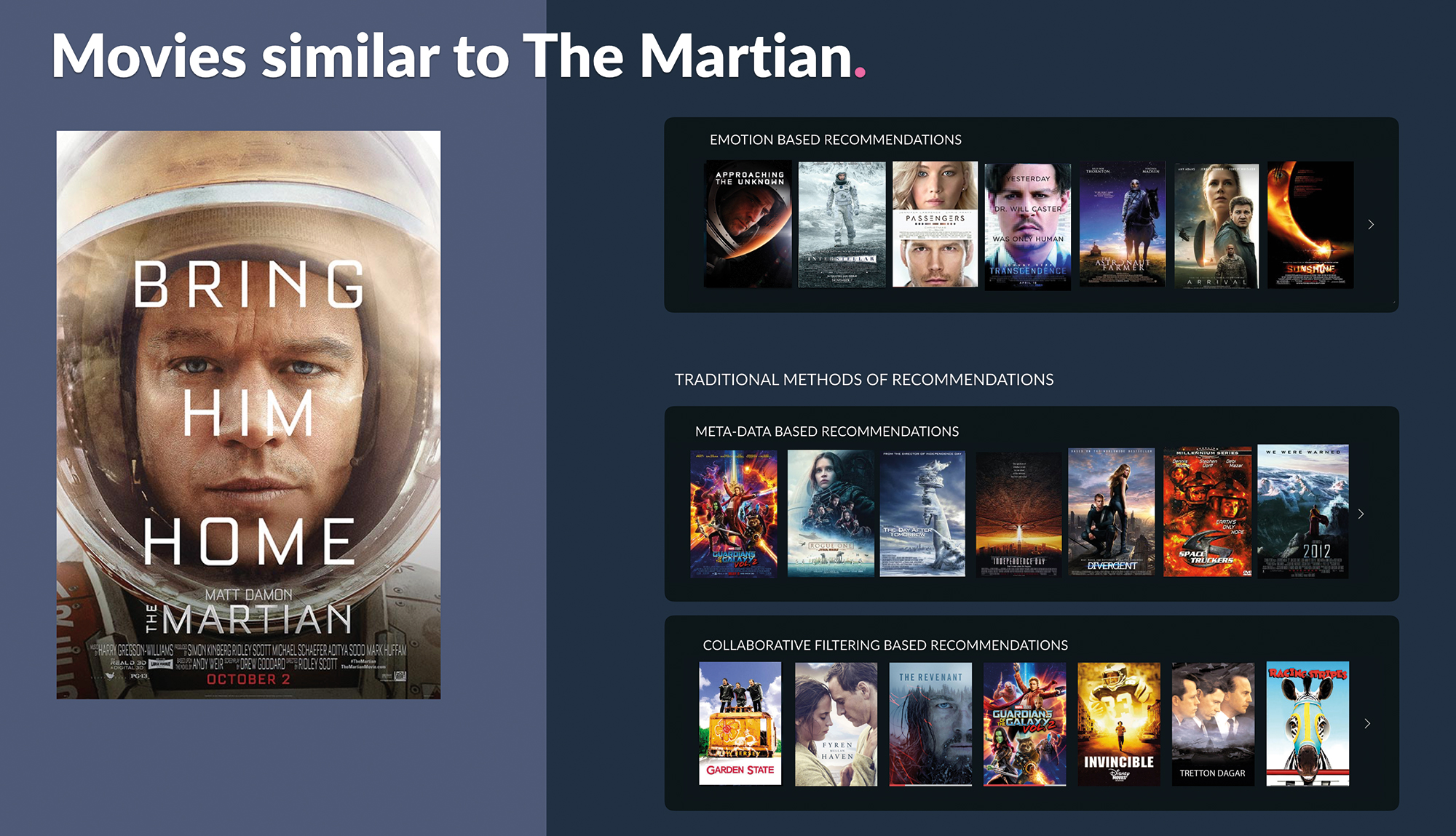

The most basic method of content recommendation is metadata-based recommendation, which is the one most prone to the foibles of human error. This relies on comparisons made between the metadata that has been tagged to each film. Some metadata might have been carefully and thoughtfully constructed. It may have even been built with machine learning, cataloguing faces and elements in the film itself. Or it may have been typed in by a low-paid data jockey, who has 1000 films to catalogue and a few lines of ad copy as a guide. Or maybe there’s no metadata at all.

The metadata content recommendation approach might note that you’ve enjoyed a film that features a car chase, and will then conclude you want more films with car chases. You now have a string of recommendations that includes Terminator 2, The Fast and the Furious, 2 Fast 2 Furious and Smokey and the Bandit. If the content on the platform has been blessed with a deep and complex set of metadata, the results of this kind of recommendation can be perfectly adequate. Other times, the connections need some detective work before they become clear (“Ah, the reason I was recommended Steel Magnolias is because my favourite movie, The Deer Hunter, has steel workers in it”).
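The tag-matching idea described above can be sketched in a few lines of Python. This is only an illustration, not Vionlabs' or any platform's actual method: the tags, the `CATALOGUE` dictionary and the `recommend_by_tags` helper are all invented here, and Jaccard overlap is just one common way to compare tag sets.

```python
def jaccard(a, b):
    """Share of tags two titles have in common, from 0.0 to 1.0."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical metadata, typed in by hand for this example only.
CATALOGUE = {
    "Terminator 2": {"car chase", "robots", "action"},
    "The Fast and the Furious": {"car chase", "street racing", "action"},
    "Smokey and the Bandit": {"car chase", "comedy"},
    "Steel Magnolias": {"drama", "friendship"},
}


def recommend_by_tags(liked, catalogue, top_n=3):
    """Rank other titles by tag overlap with one liked title."""
    liked_tags = catalogue[liked]
    scores = [
        (title, jaccard(liked_tags, tags))
        for title, tags in catalogue.items()
        if title != liked
    ]
    # Highest overlap first; ties broken alphabetically for stable output.
    scores.sort(key=lambda pair: (-pair[1], pair[0]))
    # Titles with no shared tags are never recommended.
    return [title for title, score in scores[:top_n] if score > 0]


print(recommend_by_tags("Terminator 2", CATALOGUE))
```

The sketch also shows the weakness the article points to: the ranking is only as good as the tags. A film with no metadata, or with careless metadata, simply never surfaces.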

A second approach is collaborative filtering-based recommendation. Here the preferences of multiple users are compared, and you are recommended content liked by other people whose profiles are similar to yours. In theory, collaborative filtering recommendations become more accurate the more data the system is exposed to. Your recommendations may include something like: “Other people who liked Alien also liked Blade Runner”, or “Other people who liked Alien also liked Schindler’s List”, or “Other people who liked Alien also liked La La Land”. Some of these may seem out of the blue, some absurdly obvious, but occasionally there is the opportunity to be exposed to something you might not have thought of, but might really like.
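A minimal sketch of the “other people who liked X also liked Y” pattern follows. It simply counts co-occurring likes, which is a deliberately simplified stand-in for production collaborative filtering; the user names, the `LIKES` data and the `also_liked` function are assumptions made up for this example.

```python
from collections import Counter

# Hypothetical viewing histories: user -> set of titles they liked.
LIKES = {
    "ana": {"Alien", "Blade Runner"},
    "ben": {"Alien", "Blade Runner", "La La Land"},
    "cho": {"Alien", "Schindler's List"},
    "dev": {"La La Land"},
}


def also_liked(title, likes, top_n=3):
    """Titles most often liked by the users who also liked `title`."""
    counts = Counter()
    for liked in likes.values():
        if title in liked:
            # Count every other title this like-minded user enjoyed.
            counts.update(liked - {title})
    # Most frequent first; ties broken alphabetically for stable output.
    ranked = sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))
    return [name for name, _ in ranked[:top_n]]


print(also_liked("Alien", LIKES))
```

With only four users the suggestions are driven by a handful of coincidences, which is why results from this approach can seem out of the blue until much more data has been collected.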

Vionlabs suggests a more advanced way of recommending content – emotion-based recommendations. The company has developed a methodology for evaluating content based on the emotional impact the content has on the audience.

Feeling Emotional: Vionlabs evaluates content based on the emotional impact it has on the audience.

Emotional Response

“We’re teaching the computer how people respond to imagery and sound,” explains Vionlabs founder and CEO Arash Pendari. “We taught our computers by listening to and looking at video clips based on the rating system we have built ourselves. We tell them that we think one clip is positive, one clip is negative, one is stressful, one is not stressful, and the machine learning picks up those patterns after a while. That takes all this to the next level.”

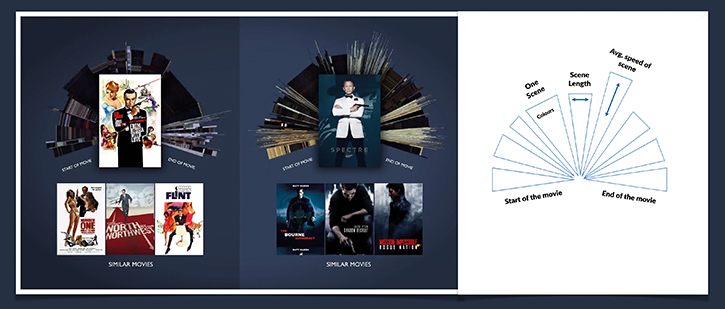

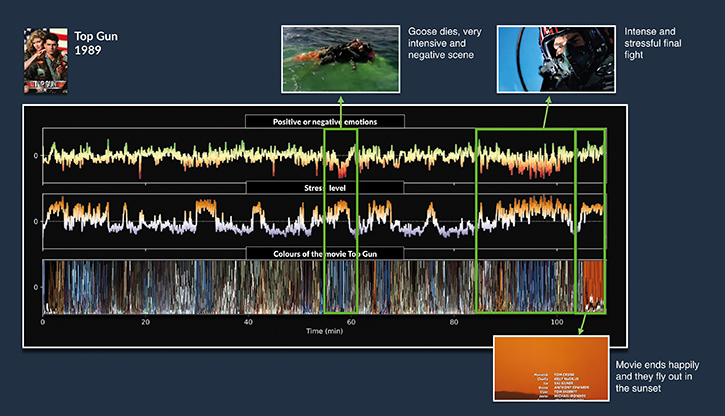

The method also takes into account the colour palette and the level of movement in each scene, and Vionlabs has produced a fascinating set of motion graphics that give a visual fingerprint of each film. As a result, clusters of recommendations become more accurate and need fewer data points to produce.

“There is so much rich data in the audio, too, that people don’t think about,” continues Pendari. “When you combine the audio with the visual elements, it’s pretty easy to pick up the actual emotion going on in a scene. There may be a lot of red in a scene, but is that because it’s a horror movie or a Christmas movie? Audio data can determine which it is pretty quickly.”

The company has been working to efficiently analyse the entire dramatic flow of a film, graphing parameters like positive vs. negative emotions, audience stress level and colour (see the analysis of Top Gun above).

“Using emotions is better than using keywords for the customer journey, too,” says Pendari. “If you’re only using keywords, you get stuck in a bubble. That’s why you’re stuck in a bubble on Facebook and Google and everywhere else. But if you have emotional data, you understand the pulse of each viewer over time. Your pulse may be that in the morning you don’t watch something above certain average speeds, but in the evening your average speed goes up and you start watching action movies.”

At present, there is no method for Vionlabs to fine-tune the customer journey in real time, so the company has divided up the viewing timeline into four segments – morning, afternoon, evening and night – allowing for different sets of recommendations for each part of the day. But there’s no reason the technology won’t catch up.
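The four-segment scheme described above might look something like the sketch below. The article does not give the hour boundaries, the scoring scale or any data, so the cut-off hours, the `MAX_PACE` limits and the per-title pace scores are all assumptions chosen purely for illustration.

```python
def day_segment(hour):
    """Map an hour (0-23) to one of four segments. Boundaries are assumed."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    if 6 <= hour < 12:
        return "morning"
    if 12 <= hour < 18:
        return "afternoon"
    if 18 <= hour < 23:
        return "evening"
    return "night"


# Hypothetical viewer profile: highest pace they tend to watch per segment.
MAX_PACE = {"morning": 0.3, "afternoon": 0.5, "evening": 0.9, "night": 0.6}

# Hypothetical per-title pace scores on a 0.0 to 1.0 scale.
TITLE_PACE = {"Top Gun": 0.8, "Steel Magnolias": 0.2, "La La Land": 0.4}


def recommend_for_hour(hour, title_pace, max_pace):
    """Titles whose pace fits the viewer's limit for the current segment."""
    limit = max_pace[day_segment(hour)]
    return sorted(t for t, pace in title_pace.items() if pace <= limit)


print(recommend_for_hour(8, TITLE_PACE, MAX_PACE))   # morning viewing
print(recommend_for_hour(20, TITLE_PACE, MAX_PACE))  # evening viewing
```

Keeping one limit per segment, rather than updating after every viewing, reflects the coarse granularity the article describes; a real-time system would need to revise the profile continuously.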

“Google changes your results as soon as you do one search. The search you do is being affected by the search you just did. Working that dynamically with data is still very hard with TV operators and the new players in that space.”

Danckwardt adds: “I read an interesting piece recently, which said that if you want to quantify human consciousness, you have to use quantum models. The main difference between quantum models and other models is that the answers depend on the questions. With every question you ask, you will change the answer to all the other questions. If we want to take it all the way, we need to think of how the movies we show now will be affecting the movies that are shown much further down the line.”

Recommendations and Well-Being

The potential for AI in recommendations could very easily extend to being able to offer content based on the viewer’s current emotional state, which could readily be inferred from data gathered from social media, movement and activity, and purchase data in near real time.

“There’s a clear study of anxiety attacks, how they are more likely to occur in the evening,” says Pendari. “So those types of people may not be able to watch dark movies or content later in the day. Recommendations could be made based on what’s best for mental health.

“These are the first steps of actually teaching a computer to perceive human emotions, and we’re applying this to the movie and TV industry right now. But I think we do need to be careful when we teach computers human emotion. I like to think there’s a responsibility for us when we work with machine learning to take care in how we drive and how we use it.”

This article originally appeared in the November 2018 issue of FEED magazine.
