Unmasking Deepfakes

Posted on Apr 5, 2024 by FEED Staff

Who can you trust?

Deepfakes are on the rise, with little being done to stop them. Verity Butler examines their damaging impact and what action, if any, is being taken to curb their spread.

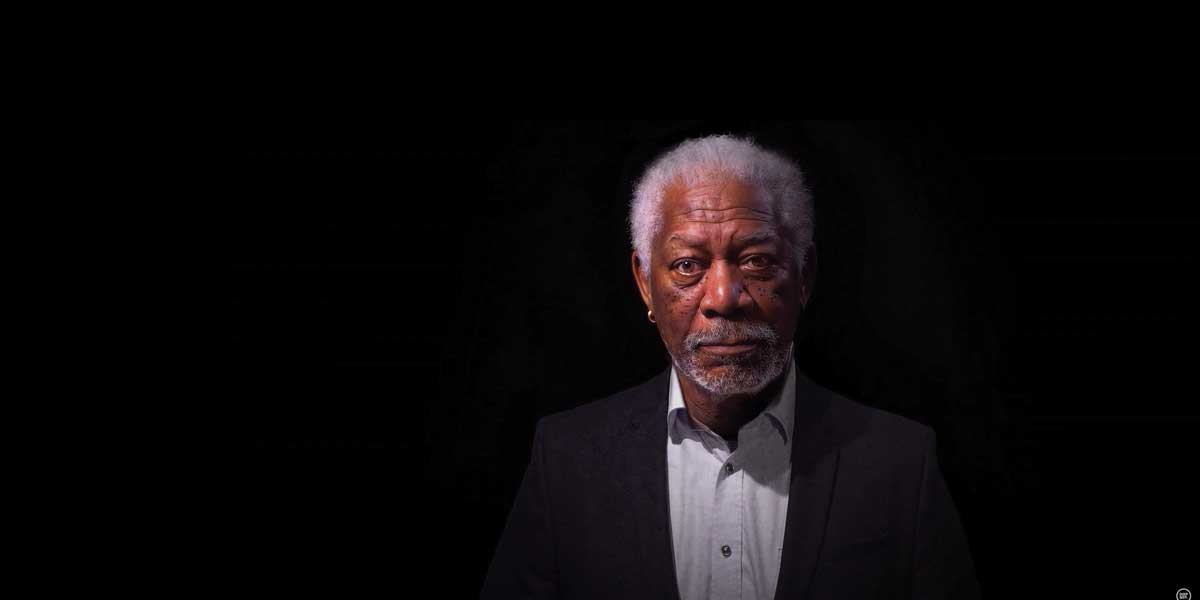

“I am not Morgan Freeman,” introduces Morgan Freeman. “What you see is not real. Well, at least in contemporary terms it is not.”

This YouTube clip, titled This is not Morgan Freeman – A Deepfake Singularity, appears to show Morgan Freeman earnestly insisting to viewers, in his iconic soothing voice, that he is not in fact Morgan Freeman.

Interestingly, in this deepfake, the subject is at least admitting it has been computer generated – and only serves to demonstrate the convincing capabilities of the phenomenon.

Alas, the conventional purpose of a deepfake is to mislead, misinform and ultimately pose a risk to both society and the overall political welfare of the entire international community.

Bernard Marr is a futurist, influencer and thought leader in the fields of business and technology – with a passion for using technology for the betterment of humanity.

“Deepfakes represent a significant challenge in our digital age, blurring the lines between truth and fiction,” he introduces. “They leverage powerful AI technologies to create convincingly realistic images, video and audio recordings that can be difficult to distinguish from genuine content.”

Deepfakes have proliferated across social media, the internet and headlines over recent years.

Though sometimes generated for the purpose of entertainment, deepfakes are usually in the news for reasons more sinister.

These range from large-scale fraud and identity theft to revenge pornography and adult content with the faces of celebrities and public figures stitched over the original video or image.

“I harbour some serious concerns about the misuse of this technology,” Marr emphasises. “The potential for harm – from spreading misinformation to violating personal privacy – underscores the urgency for both heightened awareness and the development of technological solutions to detect and mitigate their impact.”

A multimillion keyboard slip

It’s a safe bet that the average person has at some point had an anxiety dream about something bad or embarrassing happening in the workplace.

However, this first example is a case where, for one unfortunate employee, that nightmare became a horrifying reality.

In February 2024, deepfake technology was used to trick a worker at a Hong Kong branch of a multinational company – conning them out of a tidy $25 million.

The seemingly inconceivable story saw an employee from the company’s financial department receiving a message from a ‘person’ stating they were the company’s UK-based chief financial officer – as reported by the South China Morning Post (SCMP).

This was followed by a video call with said CFO and other company employees, all of whom turned out to be deepfakes.

According to SCMP, based on instructions during the call, the hoax victim transferred $25.6 million to various Hong Kong bank accounts across 15 transfers.

It was already a week into the scam by the time the employee contacted the company’s HQ and the penny finally dropped.

Hong Kong police haven’t named the company or employees involved, but indicated the fraudsters had created deepfakes of meeting participants based on publicly available video and audio footage.

The victim of the scammers did not actually interact with the deepfakes during the video conference, confirmed SCMP. Investigations are ongoing, but no arrests have been made.

This highlights how deepfakes have now surged from the bedroom into the boardroom.

A report by KPMG emphasises how businesses have been slow to act in safeguarding against the growing threat.

“Since social engineering attacks such as phishing and spear phishing typically rely on some form of impersonation, deepfakes are the perfect addition to the corporate cyber criminal’s tool bag,” the report claims.

“Unfortunately, many corporate decision-makers have a very limited understanding of deepfakes and the threat they pose. Most leaders don’t yet recognise that manipulated content is already a problem – rather than an emerging or eventual one – that affects virtually every industry. Even more troubling, many don’t yet believe the business risk is significant.”

Fake it off

Perhaps one of the most discussed use cases for deepfakes is where they have been employed to steal the face and voice of a celebrity or public figure, using them to generate pornographic content – or deliver a misleading or divisive message.

So far, it seems no amount of money or fame can protect you from falling victim to this, with recent headlines dominated by a set of pornographic deepfake images being widely circulated on X (formerly Twitter) of global superstar Taylor Swift.

For almost a full 24 hours, the offending images invaded the platform, with reports claiming that one image raked in a staggering 47 million views before eventually being taken down.

Something that can protect you, however, is your following. Swift’s fanbase is a force of nature when defending its idol, and the Swifties quickly mobilised to mass report the images – putting a stop to the issue.

There was public outcry over this invasion of the privacy of America’s sweetheart, with officials at the White House describing it as ‘alarming’.

“The recent incident involving Taylor Swift is a stark reminder of the dark side of deepfake technology,” continues Marr. “Unauthorised deepfakes – especially those that are malicious and infringe on personal rights – highlight the need for robust legal frameworks and ethical guidelines to govern the use of such technologies.

“This incident underscores the potential for deepfakes to cause harm and invade privacy, reinforcing the necessity for both societal and technological responses to protect individuals.”

What happened to Swift also served as a sharp reminder to those previously victimised by non-consensual deepfake porn, who don’t have the capacity to leverage the protection of an army of devoted fans.

In an interview with The Guardian, Noelle Martin detailed how her image-based abuse continues to impact her life and mental health to this day, despite the image being originally generated 11 years ago.

“Everyday women like me will not have millions of people to protect us and take down the content; we don’t have the benefit of big tech companies, where this is facilitated, responding to the abuse,” quotes the article.

“Takedown and removal is a futile process. It’s an uphill battle, and you can never guarantee its complete removal once something’s out there.” Martin goes on to emphasise how it affects everything from your employability and future earning capacity to your relationships – and it’s something she is even required to mention in job interviews in case a potential employer discovers the image through background checks.

Many have deemed the recent Taylor Swift images an example of a new way for men to control women.

But, as Noelle Martin’s case soberly highlights, there’s not much that’s new about this edition of misogyny.

Fighting AI with AI

Seeing as any response to the problem often overlooks everyday deepfake crime, many have turned to fellow victims to seek ways of tackling the ever-evolving issue.

#MyImageMyChoice is a cultural movement against intimate image abuse, from the creators of the documentary Another Body.

The documentary follows US college student Taylor in her search for answers and justice, after she discovers deepfake pornography of herself circulating online.

She dives headfirst into the underground world of deepfakes and discovers a growing culture of men terrorising women, influencers, classmates and friends.

More than just a cautionary tale about misused technology and the toxicity of the online world, this documentary transforms the deepfake technology weaponised against Taylor into a tool that allows her to reclaim both her story and identity.

The #MyImageMyChoice campaign aims to amplify the voices of intimate image abuse survivors.

Starting as a grassroots project, it has gone on to have an outsized impact – working with more than 30 survivors as well as organisations including the White House, Bumble, World Economic Forum and survivor coalitions.

Notably, the campaign is partnered with Synthesia, the world’s largest AI video generation platform, to safely bring anonymous stories to life with AI avatars.

Synthesia believes in the good AI has to offer, and all content submitted to the site is consensual.

The campaign’s website emphasises: “The problem doesn’t lie with technology, but how we use it.”

Marr complements this idea: “Looking to the future, it is likely that deepfakes will become more sophisticated and widespread. However, I believe as awareness grows, audiences will become more discerning, and technologies to detect and counteract deepfakes will improve.”

A problem of pastiche

So far, we have discussed archetypal deepfake examples. But as the sophistication of the technology has increased, it seems the theft of intellectual property as well as physical attributes is on the rise.

It was recently announced that the estate of actor and comedian George Carlin was to sue the media company behind a fake hour-long comedy special which purportedly used artificial intelligence to recreate the late stand-up’s comic style and material.

The lawsuit requested that a judge immediately order podcast outlet Dudesy to take down the audio special George Carlin: I’m Glad I’m Dead, in which a synthesised version of the comedian delivered a commentary on current events.

To emphasise: he died in 2008.

Carlin’s daughter, Kelly Carlin, said in a statement that the creation was ‘a poorly executed facsimile cobbled together by unscrupulous individuals to capitalise on the extraordinary goodwill my father established with his adoring fanbase’.

The lawsuit states: “None of the defendants had permission to use Carlin’s likeness for the AI-generated George Carlin special, nor did they have a license to use any of the late comedian’s copyrighted materials.”

The opening of the special includes a voiceover that names itself as the AI engine used by Dudesy, claiming it had listened to the comic’s 50 years of material and ‘did my best to imitate his voice, cadence and attitude as well as the subject matter I think would have interested him today’.

The lawsuit came shortly after the recent Hollywood writers’ and actors’ strikes, with AI being one of the major points of discussion in their resolution.

“In the broadcast, media and streaming industries, deepfakes pose both rising challenges and opportunities,” Marr continues. “On the one hand, they provide innovative methods in creating content – such as resurrecting historical figures or even enabling actors to perform in multiple languages seamlessly.

“On the other hand, they introduce risks related to misinformation, copyright infringement and ethical concerns. These industries must navigate these waters, balancing innovation with integrity and trust.”

Need a hand?

Thankfully, it’s not entirely doom and gloom.

Along with the growing number of deepfakes has come a burst of innovative businesses and tools to battle them.

Hand (Human & Digital) is a start-up aiming to bring simplicity and scale to talent identity in a world constantly evolving as both human and digital.

As a B2B SaaS company, Hand’s interoperable talent ID framework enables reliable verification of quantifiably notable real (legal and natural) persons, their virtual counterparts and fictional entities.

“In 14 months, we went from applying to become a registration agency to becoming the same level of a data site like Crossref,” begins Will Kreth, CEO of Hand. “We’re now one of only 12 DOI registration agencies.”

As digital replica usage grows, trusted tools providing vectors of authenticity for talent’s unique NIL (name, image, likeness) are essential.

Authorised by the DOI Foundation, which governs the ISO-standard DOI system, Hand’s ID registry meets emerging needs for talent provenance automation in the digital age.

“Whether it’s avatars selling out concerts and making millions of dollars – or the de-aging of Indiana Jones/Harrison Ford into his younger self – synthetic talent and digital replicas are already here. Keeping track of them is not trivial.”

Acting like a barcode, Hand brings global standardisation to the media supply chain, tracking residuals, royalties and participations via talent provenance automation.

“When it comes to deepfakes, it’s going to take a global village to attest to whether or not something was created with DALL-E 3 or OpenAI,” highlights Kreth. “What we saw with Taylor Swift and George Carlin is an infringement on name, image and likeness rights.

“We want to be part of the right side of history, so if I’m Idris Elba and my management want to monetise my likeness, I don’t want to see a bunch of rip-off or knock-off versions of me running around cyberspace. It’s about agency, traceability and how you automate the provenance of that value or media supply chain. We come to it from a media supply chain perspective.”

Ultimately, Hand works to automate and streamline the infringement issues faced by talent affected by deepfakes.

This means the registered ID (formally a DOI handle) could be used to both verify unauthorised uses for takedown notices, and also provide performers a quantifiable mechanism for authorising uses.

It exemplifies an active tool to help curb identity and intellectual property theft – as well as slow the resultant spread of misinformation.

Who does it come down to?

Though we have identified that action is being taken by individual organisations – such as Hand and #MyImageMyChoice – it seems that governing bodies and law enforcement aren’t yet seeing the threat as dangerous enough to roll out a firmer plan of action.

This is despite the fact that these bodies have taken some of the most dangerous deepfake hits themselves.

London mayor Sadiq Khan recently stated that a deepfake audio of him reportedly making inflammatory remarks before Armistice Day almost caused ‘serious disorder’.

According to BBC News, he stressed that the law is not ‘fit for purpose’ in tackling AI fakes, as the audio creator ‘got away with it’.

Khan is one of many politicians who have been targeted, yet there is currently no criminal law established in the UK which specifically covers this kind of scenario.

If one thing is clear, it’s that it’s going to take a global effort to fight this particular version of the misinformation war.

“The development of a savvier audience paired with advancements in AI and regulatory measures will be crucial in mitigating the risks associated with deepfakes,” Marr concludes. “It’s a dynamic interplay between technology, society and policy that will shape the trajectory of deepfakes in the years to come.”

This feature was first published in the Spring 2024 issue of FEED.