Creative AI

Posted on Sep 2, 2022 by FEED Staff

Creative AI tools are finally within reach. Get set for a revolution of machine-assisted content creation

Words by Neal Romanek

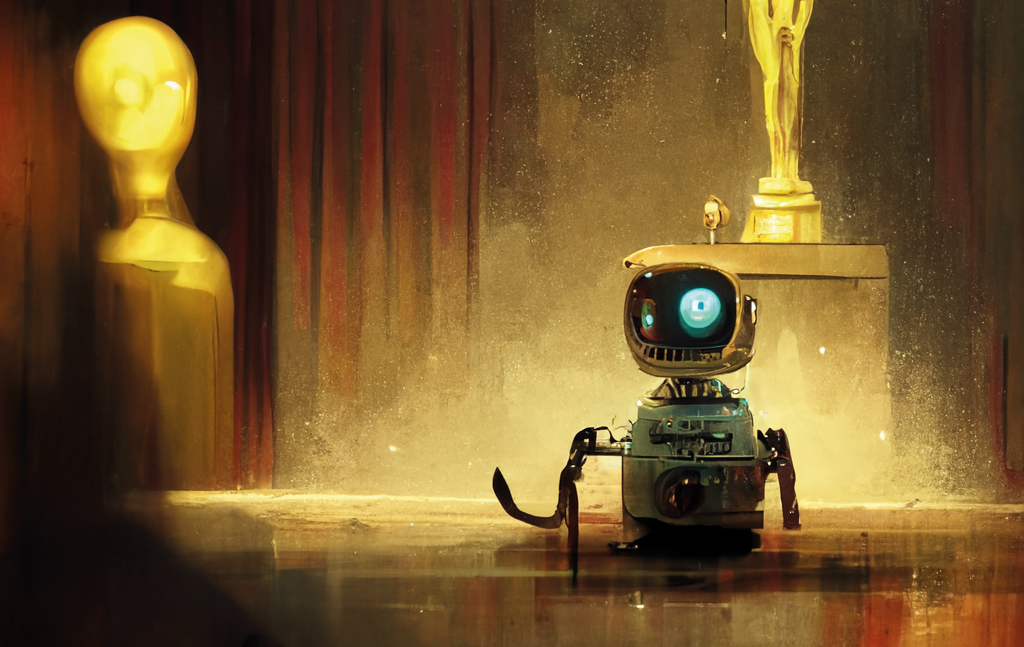

An unretouched, uncropped image returned by the Midjourney AI in response to the prompt: “Robot accepting an Oscar” (cover)

Will robots replace humans? Isn’t that the question that’s always supposed to kick off an article about AI? Of course, that’s the wrong question. The question is not ‘will AI replace humans?’ but ‘why will humans want to replace other humans with AI?’ The answer is to have more power.

We love a bit of power, and when technology promises we can produce more with less effort, we can’t resist. When we speak of AI today, we’re generally talking about machine learning. This is the use of computers that can adapt and self-correct based on data they receive, mimicking the human brain – or what we theorise the human brain to be. Except they are very different from that. Where the human brain is an organic process, inextricably linked to nature, environment and the physicality of the human body, machine learning is what you might call ‘raw brain power.’ It imitates the trial-and-error problem solving our minds perform.

Applying machine learning to a task is like asking an infinitely obsessive brain to try everything it can and come back with the results. When these are put before you for inspection, you either say ‘yes’ or ‘no’ – or maybe rate them according to how close they come to your specific requirements. The ML algorithm goes back and tries again, taking your feedback on board. It returns with more results, brings them to you once again for approval – whereupon you say ‘yes’ or ‘no’ a further time. Back and forth it goes, repeating the process until it can get remarkably accurate, useful results, or make uncannily precise predictions. Through this process (oversimplified in this example), we now have speech, text and facial recognition, instant translation in any language, cars that almost always understand the difference between a pedestrian and a street sign, and recommendation engines that know what products we need before we even know they’re needed.
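The back-and-forth described above can be sketched in a few lines of Python. This is a toy illustration only, not how any production ML system is trained: the `rate` function stands in for the human saying ‘yes’ or ‘no’, and the target value, step size and round count are all assumptions chosen for the example.

```python
import random


def rate(guess: float, target: float = 0.7) -> float:
    """Stand-in for the reviewer: a higher score means closer to what we want."""
    return -abs(guess - target)


def learn(rounds: int = 200, seed: int = 0) -> float:
    """Try, get feedback, adjust and try again, keeping the best attempt so far."""
    rng = random.Random(seed)
    best = rng.random()           # the first attempt is a blind guess
    best_score = rate(best)
    step = 0.5                    # how far each new attempt may stray
    for _ in range(rounds):
        candidate = best + rng.uniform(-step, step)  # try a variation
        score = rate(candidate)                      # put it up for inspection
        if score > best_score:                       # a 'yes': keep it
            best, best_score = candidate, score
        else:                                        # a 'no': search closer to home
            step *= 0.97
    return best


if __name__ == "__main__":
    print(round(learn(), 3))
```

Real systems adjust millions of parameters at once and rarely have a person rating every attempt, but the loop has the same shape: propose, score, keep what works, repeat.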

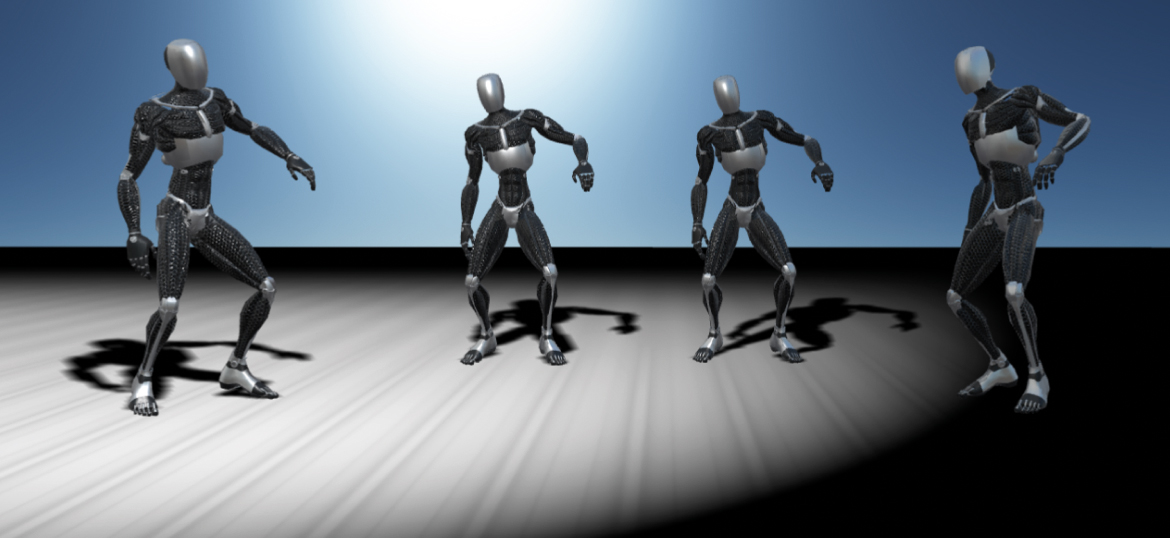

Modelling and animating humans within 3D computer graphics has historically required prohibitively complex technologies

In the media industry, AI and machine learning are used extensively, most notoriously in content recommendation. ML is also making unmanageable databases useful and monetisable, able to identify and tag huge libraries in a fraction of the time it would take a human team. But can AI help us be more creative? Can AI actually make better content?

AI-powered mocap

Radical AI has a solution that uses AI to allow one person to do what once required a whole crew of Weta Workshop technicians – live motion tracking, based only on 2D video input. No ping-pong balls and no leotards. Radical leads with its Core community access, in which the AI is made available at affordable rates, allowing users to upload video via any browser and download FBX animation data. Core has been widely deployed within post-production pipelines by grassroots content makers and developers.

The company’s Radical Live product streams live video to Radical cloud servers, where it is processed by proprietary AI. Animation data is then instantly returned for real-time ingestion into apps, games or other software. Live is also being billed as a ‘real-time multiplayer platform’ – in other words, a metaverse platform. The company also offers an API for enterprises to access its AI directly.

“While human representation is central to nearly all storytelling, it’s also the most difficult and demanding to get right, especially for real-time use cases,” says Gavan Gravesen, founder and CEO of Radical. “Modelling and animating humans within 3D computer graphics has historically required prohibitively complex technologies. To help solve this problem, and accomplish true scale, we started to combine deep learning, human biomechanics and 3D computer graphics into a platform anyone can use. We can achieve near-perfect, natural-looking human animation data from just a single 2D video input, all within a completely hardware- and software-agnostic architecture. That is because our AI studies and learns what actual human motion looks like. It doesn’t use an algorithmic, robotic approximation, but reproduces genuine representations of human skeletal movement over time.”

Radical’s goal of making human motion capture easily available means putting sophisticated animation in the hands of everyday creators and across previously ‘low-tech’ media. Social platforms can be areas of exploration, experimentation and fast iteration for motion-capture content in a way that big, expensive productions could never be. Gravesen is committed to virtual production pipelines and the leverage gained through AI.

“We’ve been developing our AI for virtual production since its inception. To us, this operates across a wide spectrum of features and use cases, from post-production using prototyping and previsualisation, through to live art performances, virtual YouTubing, interactive and immersive social media – even live TV broadcast formats.”

The company has already granted a few development licences to larger next-gen media and entertainment houses. Most of these are well-known creative agencies and production companies specialising in 3D computer graphics, especially within AR/VR.

Radical recently entered into a partnership with 3D design giant Autodesk. With a stated mission of ‘democratising end-to-end production in the cloud for content creators,’ Autodesk appears to be building a suite of tools for creating an accessible digital production workflow. The investment in Radical follows on the heels of Autodesk’s acquisition of dailies review platform Moxion and 3D animation pipeline LoUPE.

Translator droids

Tim Jung co-founded his company XL8 with a view to making translation and localisation fast and pain-free, thanks to AI. XL8’s AI-driven localisation engines were built from the ground up for media and entertainment, trained using post-edited ‘golden data’ from some of the world’s top LSPs, with prime studio and broadcast content.

“Our AIs are not, if you will, diluted from other outside sources, as with the big mass translation companies,” says Jung. XL8’s engines are built with extremely low-latency functionality, which allows for live captioning, subtitling and synthetic dubbing into multiple languages at speed. “Now broadcasts and livestreaming events can be held on a global level, in virtually real time, without significant expense or delay,” he continues. “This time and cost saving can be realised immediately, then funnelled back into the creative process and quality of production – where it belongs.”

For AI to really show off what it can do, it needs to predict – or intuit – and not just translate. XL8 is also bringing to market new developments in the size and accuracy of language models, particularly for translation. Context awareness (CA) is beginning to contribute significantly to quality. Instead of translating sentences on a one-by-one basis, context-aware models feed in information surrounding the source sentence, to interpret subtle nuances of gender, slang, multiple word meanings and colloquial phrases or idioms.
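To make the idea concrete, here is a minimal sketch of how a source sentence might be bundled with its neighbours before translation. It does not reflect XL8’s engine or API: the `with_context` helper, its field names and the window sizes are hypothetical, chosen only to show the difference between one-by-one and context-aware input.

```python
from typing import Dict, List


def with_context(sentences: List[str], index: int,
                 before: int = 2, after: int = 1) -> Dict[str, object]:
    """Bundle one source sentence with the lines around it (hypothetical format)."""
    start = max(0, index - before)
    end = min(len(sentences), index + after + 1)
    return {
        "source": sentences[index],
        "context_before": sentences[start:index],
        "context_after": sentences[index + 1:end],
    }


subtitles = [
    "Maria walked in late.",
    "She was soaked.",
    "It was raining cats and dogs.",
    "Nobody had an umbrella.",
]

# Translating line 1 alone hides who 'She' is; the neighbours supply it.
request = with_context(subtitles, 1)
```

With the surrounding lines attached, a model has what it needs to resolve the gender of ‘She’ and to treat ‘raining cats and dogs’ as an idiom rather than a weather report about animals.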

“Context-awareness models have advanced to the point of human-like capabilities. When XL8 recently released its CA models, many translators were surprised and awed by the accuracy of the results.”

Jung sees XL8’s easy translation tech as a game-changer for smaller companies and creators.

“AI/ML is extremely beneficial to smaller companies in the M&E space. An explosion in the FAST/CTV market (free advertising-supported streaming television and connected TVs) in the last few years has created huge bandwidth for content publishing on a global level. But until now, traditional localisation is still very expensive,” he goes on. “As such, smaller content owners and publishers are flying blind into new territories, where their Revshare piece may not even cover the cost of the localisation. On average, traditional subbing and dubbing for two hours of content from English to French costs about $25,000. Multiply that by several languages and it becomes prohibitively expensive for small companies. However, using technologies like XL8, this media can now be localised for under $500 a language, and even less if it’s multiple at the same time.”
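Jung’s figures are easy to run as back-of-envelope arithmetic. The sketch below assumes the $25,000 figure applies per target language for two hours of content and that costs scale linearly; since he says multi-language jobs come in cheaper still, the AI figure is best read as a ceiling rather than a quote.

```python
from typing import Tuple

TRADITIONAL_PER_LANGUAGE = 25_000  # USD, Jung's average for two hours of content
AI_PER_LANGUAGE_CEILING = 500      # USD, Jung's 'under $500 a language'


def localisation_costs(languages: int) -> Tuple[int, int]:
    """Return (traditional cost, AI cost ceiling) in USD, assuming linear scaling."""
    return (TRADITIONAL_PER_LANGUAGE * languages,
            AI_PER_LANGUAGE_CEILING * languages)


traditional, ai_ceiling = localisation_costs(5)
```

For five languages, that works out at $125,000 against no more than $2,500.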

Art-ificial intelligence

What about using AI for real creativity, and helping build content? When will we be able to say: ‘AI, one sci-fi thriller with a strong female character and an opening for a sequel please – oh, and in the style of a spaghetti western, too?’

“A tremendous number of research projects have been released in 2022 alone,” says Jung. “Among these are large language models in NLP (natural language processing), such as Google’s Pathways, which can even explain why a given joke is funny; OpenAI’s Dall-E 2 generates images from a text description. The impact this new approach brings to media creation will be fundamental.”

C-3PO tells us in Star Wars: “I’m not much more than an interpreter, and not very good at telling stories.” But in Return of the Jedi, he actually does tell a story to a rapt audience – the liar.

We’re going out on a not-very-long limb and saying that AI will be creating moving-picture content, at scale, in the next ten years. There is such demand for content and such unwillingness to pay people to make it, that the only way forward – all things remaining equal – would seem to be AI-generated. In the spring 2022 issue of FEED, we went to some length to show that content was no longer king. In fact, it’s now just fuel to power an attention engine. It doesn’t matter if it’s great; it only has to be something people are compelled to watch.

That’s a pretty dystopian outlook on the future of content, but let’s go back to our original premise – that AI is just there to superpower whatever humans want to do. If we all desire a different, more uplifting moving-image future, AI could help us do that better, too. The past year or two has seen a boom in widely accessible tools for generating complex AI imagery from scratch. That is: tell the AI what you want to see and it will produce it for you.

Nosferatu in RuPaul’s Drag Race

Dall-E, developed by OpenAI, is a neural network that creates images from text captions. According to the company’s own description: “Dall-E is a 12 billion parameter version of GPT-3 trained to generate images from text descriptions, using a data set of text–image pairs. We’ve found that it has a diverse set of capabilities, including creating anthropomorphised versions of animals and objects, combining unrelated concepts in plausible ways, rendering text and applying transformations to existing images.”

This year, a free browser-based image generator inspired by Dall-E took off under the name ‘Dall-E mini.’ Built independently of OpenAI as an open-source project led by Boris Dayma, with Pedro Cuenca among its contributors, it proved staggeringly popular on social media, as people tried virtually every prompt they could imagine, from ‘Nosferatu in RuPaul’s Drag Race’ to ‘Walter White in Animal Crossing’ – or even ‘(insert politician here) suspended over a pool of sharks.’ Time after time, Dall-E mini would return its best guesses at creating the right image. Results were often spot on, sometimes creative and occasionally revelatory.

Many images generated in Dall-E mini are grotesque, with bizarre, distorted faces and limbs, alongside strange blends of background and subject. But this is part of the appeal. Seeing an AI try to create an image of a human from scratch – based on what it has been told a human looks like in the past – and not getting it quite right gives us a feeling of superiority. Combine this with the charm we feel when we see a child’s drawing of a person that’s just a head with arms and legs.

Dall-E mini has since been renamed Craiyon (craiyon.com), a free-to-access brand of its own that remains with developers Dayma and Cuenca. In the meantime, OpenAI released Dall-E 2, which generates more realistic and accurate images with four times the resolution of its predecessor. Dall-E 2 is no preschool image generator that you patronise by sticking its drawings on the fridge. This is an AI that can produce several plausible alternative versions of Girl with a Pearl Earring for you, or seamlessly add content to a pre-existing picture.

Midjourney

Another image generator built on natural language processing made a splash this year – Midjourney. It released its beta tools to artists, designers and educators, and results were often astonishing. Midjourney could produce intelligently composed images with beautifully rendered subjects, making sophisticated and insightful aesthetic choices.

What makes Midjourney an especially handy tool for creatives is its ability to take direction on board. Giving the prompt: ‘brilliant magazine about video technology in autumn, hopeful future, very detailed’ produces… well, look at the images on the previous pages. Midjourney is not a toy – not just a toy, at least – but an advanced tool for image creation, ideation and design. It also gives useful returns for just about any prompt, including the abstract or philosophical. ‘Where have the days gone?’ will likely offer up a melancholy meditation on time.

Through a list of shortcuts and commands, users can give weight to different elements in the image, employ various seeds, introduce chaos or control, and manipulate styles and quality in very nuanced ways. An ability for artists – as well as developers – to control a sophisticated AI’s output makes tools like Midjourney and Dall-E game-changing.
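As a rough illustration of that control, the sketch below assembles a prompt string with weighted elements and a few options. The `build_prompt` helper is hypothetical, written for this example; the `::` weight syntax and the `--seed`, `--chaos` and `--q` flags follow Midjourney’s documented parameters as we understand them, though accepted values vary between model versions, so treat the numbers as placeholders.

```python
from typing import Dict, Optional


def build_prompt(parts: Dict[str, float],
                 seed: Optional[int] = None,
                 chaos: Optional[int] = None,
                 quality: Optional[float] = None) -> str:
    """Join weighted prompt elements and optional flags into one prompt string."""
    # 'text::2' asks for twice the emphasis of 'text::1'
    weighted = " ".join(f"{text}::{weight:g}" for text, weight in parts.items())
    flags = []
    if seed is not None:
        flags.append(f"--seed {seed}")      # reuse a seed to get repeatable starts
    if chaos is not None:
        flags.append(f"--chaos {chaos}")    # higher values mean more varied results
    if quality is not None:
        flags.append(f"--q {quality:g}")    # trade rendering time for detail
    return " ".join([weighted, *flags])


prompt = build_prompt(
    {"brilliant magazine about video technology": 2,
     "autumn": 1,
     "hopeful future": 1},
    seed=42,
    chaos=20,
)
```

Keeping the seed fixed while changing one weight at a time is a simple way to see what each element of a prompt is actually contributing.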

But for AI to create video content on demand, it must be trained to produce output that exists in time. This starts with executing on prompts like ‘a woman walks across a room.’ Given current capabilities, this is pretty easy, but still requires a level of server time not easily accessible to most. As usual, what starts a technological revolution is not the tech itself, but how easily accessible it is made.

“The real unknown is whether AI can shape live content itself by enabling entirely new forms of creative expression,” muses Radical’s Gravesen. “As is always the case, new aesthetics will need to stand the test of time, to go beyond the gimmick. To us, deep learning and AI are a means to enable artistic expression at greater scale. We judge the success of our AI by its ability to support creators in telling a good story – authentically and reliably. And in that critical sense, yes, AI will help tell stories through live broadcast and streaming formats, just as much as better software and hardware always does.”

This article first featured in the autumn 2022 issue of FEED magazine.