Site visit: Inside InterDigital

FEED visits the labs of a company researching and building the media tech landscape for the next decade

InterDigital doesn’t create products; it creates the future in which other products are going to live.

The company, whose research labs in Rennes, France, sit an hour’s drive from Mont-Saint-Michel, specialises in developing and patenting technology – codecs, formats and processes that can be incorporated into products actually built by others.

Founded in 1972, the company is deeply embedded in the technologies driving the smartphone revolution and the networks and services around it.

Road trip: InterDigital invited analysts and journalists to tour its Rennes headquarters

As the industry it has traditionally served becomes a leader in content distribution and creation, InterDigital has decided to broaden its scope, and last year acquired Technicolor’s Research & Innovation Unit. The acquisition gives InterDigital a formidable addition to its existing research facilities and the ability to rapidly expand into building future technologies at the intersection of networks and video.

The acquisition also gives InterDigital a working relationship with Technicolor’s production services arm, as well as the media industry it serves.

FEED was invited to visit the InterDigital labs in Rennes to see first-hand some of the work being done and to get a glimpse of technologies that most people won’t get to see for another five years.

“Our company was founded two years before the first cell phone call was ever made,” explained Patrick Van de Wille, InterDigital’s chief communications officer.


“It was founded to do digital wireless research by a high school dropout stockbroker who loved doing two things – selling stocks and hanging out at the beach on the Jersey Shore near Philadelphia, where he was from. He had this idea that he could come up with a device that would enable him to sell stock from the beach.”

The company that stockbroker Sherwin Seligsohn founded was originally called International Mobile Machines Corporation. It started researching digital telephony back when anything like a mobile phone was “essentially a walkie-talkie.”

Throughout the rise of digital mobile technology, InterDigital has steadily developed advanced technologies used in digital cellular and wireless products, including 2G, 3G, 4G and IEEE 802-related products. It is also a major researcher in 5G and, according to the company, has made more than 30,000 contributions to key global standards.

“InterDigital was like the mammals at the time of the dinosaurs. Now a lot of those giants don’t exist and we’ve evolved,” said Van de Wille during our visit.

Clone wars

FEED was allowed access to the research labs and to the scientists brought over to InterDigital from Technicolor. The new InterDigital Immersive Lab is focused on developing immersive media technologies, including virtual and augmented reality, light field imaging and real-time VFX.

Tied up in this nexus of next-generation imaging are technologies that are assuredly the future of communications and entertainment. (Read our discussion with InterDigital Immersive Lab director Gaël Seydoux here.)

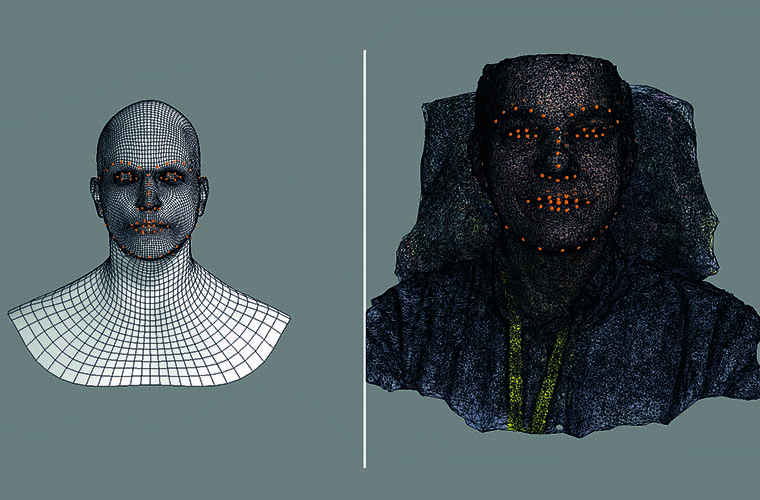

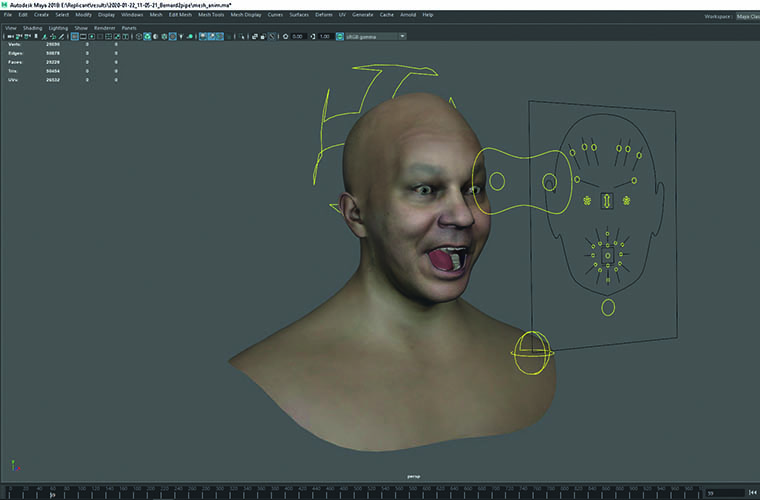

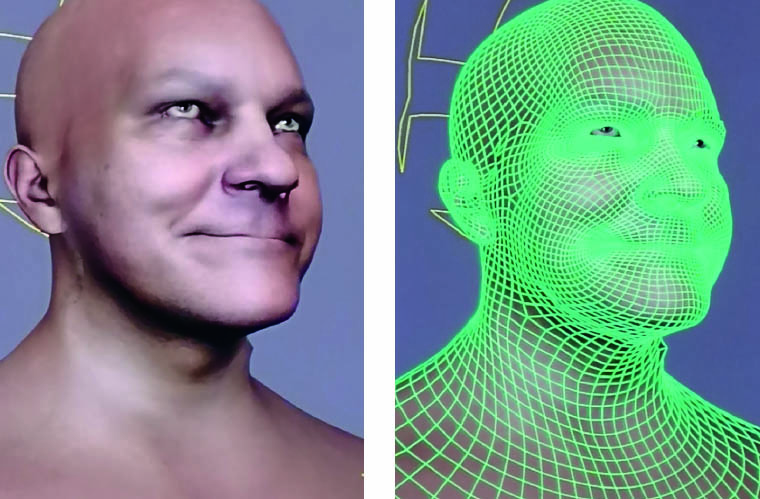

On show in the Immersive Lab was technology that had been employed by Technicolor in its visual effects services, but might now serve as the basis for much of our future digital interaction. The lab has developed technology for rapidly prototyping a digital duplicate of a human subject. The resulting realistic asset can be animated and manipulated after the fact in real time.

FEED editor Neal Romanek volunteered to enter a wired-up tent, which looked like something out of a superhero origin story. Inside, however, was a well-illuminated chair and an encircling array of DSLR cameras. The capture itself took no longer than a shutter opening and closing; the bulk of the process was taken up by about 20 minutes of digital stitching and rendering, which resulted in an uncannily realistic bust appearing on a monitor, grinning, grimacing and blinking as it ran through a series of preset animation cycles.

Mixing it up: An InterDigital researcher demos tech for creating mixed reality on the fly

The digital avatar system, in its current configuration, does not render hair. But adding locks to an avatar is probably only a matter of the resolution of capture, getting the physics right and just sheer processing speed. Fortunately, the hair issue was a moot point for FEED’s editor – the bald head was startlingly realistic.

One can imagine a future where sitting to get your digital avatar done will be as easy as having your passport photo taken. The harder question will be how that digital avatar might be used in an increasingly complex virtual world.

Gaël Seydoux pointed out that the physical image of a person is only one small part of producing a digital avatar. Attached to it could be large amounts of additional data about the person, as well as motion data. One of the things that distinguishes one person from another is movement: the timings and rhythms of our physicality. These things, according to Seydoux, can be turned into numbers, such as the speed at which a person blinks or the sequence of facial movements that occur when a person is feeling an emotion.


Seydoux painted a picture of the future of communications: “I can build an avatar with all my behaviour and my expressions, and when I chat to my friend in Australia with my goggles on and I load my avatar and they load theirs, we can be in a convincing virtual space together. I can move around and see him or her from every angle and we talk and there’s a real telepresence.

“I think there’s a market there. We’re building the technology here for that. But there are many technology hurdles still in order to avoid falling into this ‘uncanny valley’ where something doesn’t feel right, but we don’t know exactly why. If I look at my avatar and I don’t believe in one aspect of it, maybe just the way the mouth moves, it’s not going to work.”

Digital DNA

InterDigital’s Imaging Science Lab is run by computer scientist Lionel Oisel, who was also head of research at LA’s Technicolor Experience Center (TEC), which worked to develop practical technologies for VR and immersive storytelling. InterDigital already had a dedicated group working on imaging research, such as video compression, but since Oisel’s arrival the lab has grown to more than 80 researchers and now includes research into HDR.

The lab’s work in prototyping, demonstration and research also takes in some of InterDigital’s partners, which include Technicolor, Philips and Sony, as well as academic institutions. When Oisel’s team was at Technicolor, its remit was split between research contracted out to InterDigital and work for Technicolor’s production services business, which largely served the visual effects industry. The move into InterDigital’s research department has completely changed the way team members think about their work.

“The DNA of InterDigital was completely different from the DNA of Technicolor,” said Oisel. “At InterDigital, most people are researchers with PhDs, and research is their main motivation. At Technicolor, research was short term – it claimed to be long term, but long term for Technicolor is one year. That’s really long term for the VFX industry.

“Now that we’ve moved to InterDigital, short term is five years and long term – I don’t even know what long term is. We don’t even know what long term is. We know we won’t have a direct impact today. We’re preparing for the future. That’s good for researchers and it’s a good way to attract people. InterDigital is all about research and I don’t know too many other companies like that.”

Finding frugal AI

Laurent Depersin heads up InterDigital’s Home Lab, which looks at delivering new technologies to the home, including a whole basket of technologies aimed at benefiting the end customer. These range from wireless networking, including 5G video and VR streaming, to cloud computing.

Depersin sees a lot of promise in machine learning in the future of video – and not in the unsettling robots vs humans sense that’s producing so much anxiety.

His lab is looking at the possibilities of using AI much closer to the edge devices and in the home. These local AIs will use less energy and be better at preserving privacy.

The ubiquitous connectivity promised in the future, driven by AI, also promises to consume an unsustainable amount of energy. Rather than AI and machine learning operating in every device in every home and workplace, Depersin sees a single, local AI being used for many purposes.

It takes two: A digital duplicate of the FEED editor

“Now if you have several rooms and you want to use an Amazon Alexa, you need several Alexas,” he says. “But we see a time when you will have just a single AI in the home that will interface with every room and none of that data would have to leave the building.”

InterDigital’s Palo Alto AI lab is working on deep neural model compression, which tries to minimise the amount of memory and processing an AI uses. Meanwhile, Depersin’s lab in Rennes is working on what is called “organic AI” or “frugal AI”.

“When you show a kid a picture of a cat, you don’t need to show him several thousand cats so that he can recognise it,” explains Depersin. “This is something we’re still missing with the deep learning approach. It still requires a lot of data for one task. This is the idea of creating an AI that can learn on the go and doesn’t need a huge amount of processing power.”

The age of an all-encompassing virtual reality, where we all interact with each other’s digital avatars in a zero-latency global network driven by personal AIs, might seem a few years off, but at InterDigital it’s right around the corner.

Get ready, the future is coming.

This article first appeared in the March 2020 issue of FEED magazine.