Rendering The Impossible

When games engines meet live broadcast, the real and photorealistic are interchangeable.

Tools that enable broadcasters to create virtual objects that appear as if they’re really in the studio have been available for years, but improvements in fidelity, camera tracking and the fusion of game engine renders with live footage have seen augmented reality go mainstream.

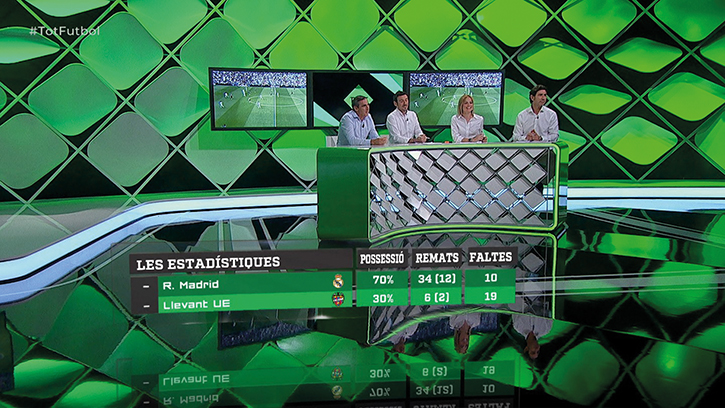

Viva Espana: Sports shows in Spain, including Tot Esport and Tot Futbol, use AR systems from wTVision, based in Lisbon

Miguel Churruca, Marketing and Communications director at 3D graphics systems developer Brainstorm, explains: “AR is a very useful way of providing in-context information and enhancing live images while improving and simplifying the storytelling. Examples of this can be found in election nights and entertainment and sports events, where a huge amount of data must be shown in-context and in a format that is understandable and appealing to the audience.”

Virtual studios typically broadcast from a green screen set, but AR comes into play where there is a physically built set in the foreground, and augmented graphics and props placed in front of the camera. Some scenarios might have no physical props at all with the presenter interacting solely with graphics.

“Apart from the quality of the graphics and backgrounds, the most important challenge is the integration and continuity of the whole scene,” says Churruca. “Having tracked cameras, remote locations and graphics moving with perfect integration, perspective matching and full broadcast continuity are essential to providing the audience with a perfect viewing experience.”

The introduction of games engines, such as Epic’s Unreal Engine or Unity, brought photorealism into the mix. Originally designed to quickly render polygons, textures and lighting in video games, these engines can seriously improve the graphics, animation and physics of conventional broadcast character generators and graphics packages.

Virtual Pop/Stars

Last year a dragon made a virtual appearance as singer Jay Chou performed at the opening ceremony for the League of Legends final at Beijing’s famous Bird’s Nest Stadium. This year, the developer Riot Games wanted to go one better and unveil a virtual pop group singing live with their real-world counterparts.

K/DA is a virtual girl group consisting of skins of four popular characters in League of Legends. Their vocals are provided by a cross-continental line-up of flesh and blood music stars: US-based Madison Beer and Jaira Burns, who both got their start on YouTube channels, and Miyeon and Soyeon from K-pop girl group (G)I-DLE. It’s a bit like what Gorillaz and Jamie Hewlett have been up to for years, except it’s happening live on a stage in front of thousands of fans.

Riot Games tapped Oslo-based The Future Group (TFG) to bring K/DA to life for the opening ceremony of the League of Legends World Championship Finals at South Korea’s Munhak stadium. The show would feature the real-life singers performing their K/DA song Pop/Stars onstage with the animated K/DA characters.

Riot provided TFG with art direction and models of the K/DA characters. Los Angeles post house Digital Domain supplied the motion capture data for the group, with TFG completing K/DA facial expressions, hair, clothing, texturing and realistic lighting.

“We didn’t want to make the characters too photorealistic,” says Lawrence Jones, Executive Creative Director at TFG. “They needed to be stylised, yet still believable. That meant getting them to track to camera and having the reflections and shadows change realistically with the environment. It also meant their interaction with the real pop stars onstage had to look convincing.”

All the animation, camera choices and cuts were pre-planned, pre-visualised and entirely driven by timecode to sync with the music.

“Frontier is our version of the Unreal Engine which we have made for broadcast and real-time compositing. It enables us to synchronise the graphics with the live signal frame accurately. It drove the big monitors in the stadium (for fans to view the virtual event live) and it drove the real world lighting and pyrotechnics.”

Three cameras were used: a Steadicam, a PTZ cam and a camera on a 40ft jib, all with tracking data supplied by Stype.

“This methodology is fantastic for narrative-driven AR experiences and especially for elevating live music events,” he says. “The most challenging aspect of AR is executing it for broadcast. Broadcast has such a high-quality visual threshold that the technology has to be perfect. Any glitch in the video not correlating to the CG may be fine for Pokémon Go on a phone, but it will be a showstopper – and not in a good way – for broadcast.”

Over 200 million viewers have watched the event on Twitch and YouTube.

“The energy that these visuals created among the crowd live in the stadium was amazing,” he adds. “Being able to see these characters in the real world is awesome.”
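The timecode-driven approach described in this section, where pre-planned animation, camera cuts and effects are all triggered from a shared clock, can be illustrated with a minimal sketch. It is not TFG’s Frontier implementation: the 50fps frame rate, the non-drop-frame `HH:MM:SS:FF` format, the cue names and the function names are all assumptions made for illustration.

```python
from bisect import bisect_right

FPS = 50  # assumed frame rate; the article does not state one


def timecode_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


# Hypothetical cue list: (start timecode, cue name), sorted by start time.
CUES = [
    ("00:00:00:00", "intro_wide_jib"),
    ("00:00:12:25", "characters_enter"),
    ("00:00:30:00", "steadicam_closeup"),
    ("00:01:05:10", "pyro_chorus"),
]
CUE_FRAMES = [timecode_to_frames(tc) for tc, _ in CUES]


def active_cue(tc: str) -> str:
    """Return the name of the cue that should be playing at the given timecode."""
    frame = timecode_to_frames(tc)
    # Find the last cue whose start frame is at or before the current frame.
    index = bisect_right(CUE_FRAMES, frame) - 1
    if index < 0:
        raise ValueError("timecode precedes the first cue")
    return CUES[index][1]
```

Because every device reads the same timecode, graphics, screens, lighting and pyrotechnics can each look up their own cue list against one clock rather than being triggered by hand.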

Pro Wrestling Gets Real

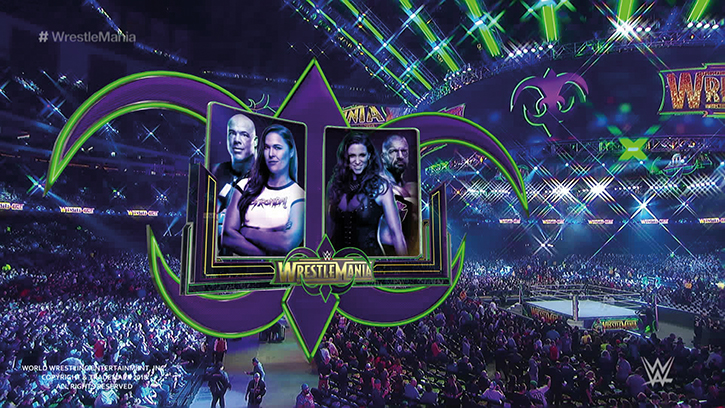

World Wrestling Entertainment (WWE) enhanced the live stream of its annual WrestleMania pro wrestling event with augmented reality content last April.

The event was produced by WWE, using Brainstorm’s InfinitySet technology. The overall graphic design was intended to be indistinguishable from the live event staging at the Mercedes-Benz Superdome in New Orleans. The graphics package included player avatars, logos, virtual lighting with refractions and substantial amounts of glass and other challenging-to-reproduce, semi-transparent and reflective materials.

Using Brainstorm’s InfinitySet 3, the brand name for the company’s top-end AR package, WWE created a range of different content, from on-camera wrap-arounds to be inserted into long format shows, to short self-contained pieces. Especially useful was a depth-of-field/focus feature, and the ability to adjust virtual contact shadows and reflections to achieve realistic results.

Crucial to the Madrid-based firm’s technology is the integration of Unreal Engine with the Brainstorm eStudio render engine. This allows InfinitySet 3 to combine the high-quality scene rendering of Unreal with the graphics, typography and external data management of eStudio and allows full control of parameters such as 3D motion graphics, lower-thirds, tickers, and CG.

Infinity And Beyond: Brainstorm’s InfinitySet creates photorealistic scenes in real time, even adjusting lighting to match the virtual sets

The virtual studio in use by the WWE included three cameras with an InfinitySet Player renderer per camera with Unreal Engine plug-ins, all controlled via a touchscreen. Chroma keying was by Blackmagic Ultimatte 12. For receiving the live video signal, InfinitySet was integrated with three Ross Furio robotics on curved rails, with two of them on the same track, equipped with collision detection.

WWE also continues to use Brainstorm’s AR Studio, a compact version which relies on a single camera on a jib with Mo-Sys StarTracker. There’s a portable AR system too, designed to be a plug-and-play option for on-the-road events.

The Brainstorm tech also played a role in creating the “hyper-realistic” 4K augmented reality elements that were broadcast as part of the opening ceremony of the 2018 Winter Olympic Games in PyeongChang. The AR components included a dome made of stars and virtual fireworks that were synchronised and matched with the real event footage and inserted into the live signal for broadcast.

As with the WWE, Brainstorm combined the render engine graphics of its eStudio virtual studio product with content from Unreal Engine within InfinitySet. The set-up also included two Ncam-tracked cameras and a SpyderCam for tracked shots around and above the stadium.

InfinitySet 3 also comes with a VirtualGate feature which allows for the integration of the presenter not only in the virtual set but also inside additional virtual content within it, so the talent in the virtual world can be ‘teletransported’ to any video with full broadcast continuity.

Augmented Reality for Real Sports

ESPN has recently introduced AR elements to refresh the presentation of its long-running sports discussion show, Around the Horn. The format of the show is in the style of a panel game and involves sports pundits located all over the US talking with the host, Tony Reali, via a video conference link.

The new virtual studio environment, created by the DCTI Technology Group using Vizrt graphics and Mo-Sys camera tracking, gives the illusion that the panellists are in the studio with Reali. Viz Virtual Studio software can manage the tracking data coming in from any tracking system and works in tandem with Viz Engine for rendering.

“Augmented reality is something we’ve wanted to try for years,” Reali told Forbes. “The technology of this studio will take the video game element of Around the Horn to the next level while also enhancing the debate and interplay of our panel.”

Since the beginning of this season’s English Premier League, Sky Sports has been using a mobile AR studio for football match presentation on its Super Sunday live double-header and Saturday lunchtime live matches. Sky Sports has worked with AR at its studio base in Osterley for some time but by moving it out onto the football pitch it hopes to up its game aesthetically, editorially and analytically.

A green screen is rigged and de-rigged at each ground inside a standard match-day, 5m x 5m presentation box with a real window open to the pitch. Camera tracking for the AR studio is done using Stype’s RedSpy with keying on Blackmagic Design Ultimatte 12. Environment rendering is in Unreal 4 while editorial graphics are produced using Vizrt and an Ncam plug-in.

Sky is exploring the ability to show AR team formations using player avatars and displaying formations on the floor of the studio, appearing in front of the live-action football pundits.

Sky Sports head of football Gary Hughes says the AR set initially looked “very CGI” and “not very real” but that it has improved a lot since its inception. “With the amount of CGI and video games out there, people can easily tell what is real and what is not. If there is any mystique to it, and people are asking if it is real or not, then I think you’ve done the right thing with AR.”

Upward Trajectory: AR elements have improved quickly, moving from looking quite artificial to being photorealistic

Iberian Iterations

Spanish sports shows have taken to AR like a duck to water. Several use systems and designs from Lisbon’s wTVision, which is part of Spanish media group Mediapro. In a collaboration with Vàlencia Imagina Televisió and the TV channel À Punt, wTVision manages all virtual graphics for the live shows Tot Futbol and Tot Esport.

The project combines wTVision’s Studio CG and R³ Space Engine (real-time 3D graphics engine). Augmented Reality graphics are generated with camera tracking via Stype.

For Movistar+ shows like Noche de Champions, wTVision has created an AR ceiling with virtual video walls. Its Studio CG product controls all the graphics. For this project, wTVision uses three cameras tracked by RedSpy, with Viz Studio Manager and three Vizrt engines, and the AR output covers the ceiling of the real set and the virtual fourth wall.

The same solution is being used for the show Viva La Liga, in a collaboration with La Liga TV International.

AR is also being used for analytical overlay during live football. Launched in August, wTVision’s AR³ Football is able to generate AR graphics for analysis of offside lines and free-kick distances from multiple camera angles. When a director switches cameras, the system auto-recalibrates the AR, which takes a couple of seconds to appear on air.

Realistic and customisable AR for broadcast has only just matured, but it is already delivering extraordinary results. It may not be long before AR content outshines the reality it’s augmenting.

This article originally appeared in the December 2018 issue of FEED magazine.
