Eye tracking software | A new age for accessibility

Posted on Apr 12, 2021 by Neal Romanek

Ganzin Technology is demonstrating how eye-tracking software could be the next frontier in device control, data collection and accessibility

Say goodbye to repetitive motion syndrome. Your entire work-day – and recreation time – will soon be controlled with a look. Eye-tracking technology is on the verge of becoming the next major interface in our digital lives, as well as a major source of data to be mined.

Introducing Ganzin Technology

Taiwan-based company Ganzin Technology was spun out of eye-tracking research at National Taiwan University. At CES 2019 it launched its Aurora tracker. The tiny tracker was designed to be easily integrated into all kinds of devices, particularly AR and VR devices. Aurora then won a CES Innovation Award in 2020.

Ganzin has worked hard to get Aurora into the sweet spot of very small size, low power consumption and affordability. The tracker can be integrated into partner technology such as VR goggles or lightweight AR glasses by incorporating two pieces of hardware: the EyeSensor and the Eye Processing Unit (EPU).

Ganzin sees a world in which high-quality augmented reality will be a common, hands-free interface with the digital world, mostly through near-eye displays like AR glasses. The company is also counting on 5G networks to be a key driver of VR and AR technologies.

EYE ON THE FUTURE: Ganzin Technology was spawned from eye-tracking research at National Taiwan University

“Eye tracking is a must-have component for AR and VR,” says Ganzin product manager Martin Lin. “Gaze is the only interface where you can point to a virtual object and a real object at the same time.”

Surprisingly, instruments for recording and tracking the human gaze are over a century old.

One of the earliest was a kind of contact lens connected to an aluminium pointer, developed by American psychologist Edmund Huey for studying how a person’s eye moves when reading.

Fortunately, eye-tracking tech has become a bit less intrusive. One of the principal methods in recent use tracks reflections of near-infrared light on the cornea. But this method is too complex to lend itself well to everyday consumer use.

“They usually put a lot of components in front of your eyes,” explains Lin. “It could be more than 10 LED sensors. This means a high barrier for the device makers and they’re not fit for AR technologies or smart glasses.”

Ganzin’s “micro eye-tracking module” is small enough to fit in the bridge of regular glasses. In addition to having an attractive form factor, the eye-tracking sensors, which analyse the position and direction of each eye, have been calibrated using machine learning based on a massive database of human eye images.

Qualcomm

The company has begun working with Qualcomm to integrate with the Snapdragon XR2 AR/VR platform, which is used by most major AR and VR devices. It has also announced a new eye-tracking software package based on the XR2 platform. As a result, hardware developers using the Qualcomm platform will be able to easily integrate Ganzin’s eye tracking into their products.

Ganzin’s eye tracking also calculates eye convergence. Simpler types of eye tracking rely on a fixed distance between the user and subject matter to operate at their best. But for tracking to work properly in an AR world, it’s essential to pinpoint where in 3D space a user’s gaze is aimed. This is how Iron Man is able to clock a bad guy 100 metres ahead, and immediately activate the missile controls floating four inches in front of his face. This also opens up the possibilities for true depth of field and variable focus in VR content, more closely mimicking real human eyesight in virtual settings.
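To make the idea of convergence concrete, here is a minimal geometric sketch. It is not Ganzin’s method, which the article does not describe. It assumes a tracker has already supplied a position and a gaze direction for each eye (the function name `gaze_point_3d` and its parameters are hypothetical), and it estimates the 3D point of regard as the midpoint of the shortest segment between the two gaze rays.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def gaze_point_3d(
    left_eye: Vec3,
    left_dir: Vec3,
    right_eye: Vec3,
    right_dir: Vec3,
    eps: float = 1e-12,
) -> Optional[Vec3]:
    """Estimate where two gaze rays converge (hypothetical helper).

    Each eye is modelled as a line: eye position + t * gaze direction.
    Real gaze lines rarely intersect exactly, so we return the midpoint
    of the shortest segment joining them. Returns None when the lines
    are (nearly) parallel, i.e. there is no usable convergence.
    """
    w0 = tuple(l - r for l, r in zip(left_eye, right_eye))
    a = _dot(left_dir, left_dir)
    b = _dot(left_dir, right_dir)
    c = _dot(right_dir, right_dir)
    d = _dot(left_dir, w0)
    e = _dot(right_dir, w0)

    denom = a * c - b * b  # zero when the two directions are parallel
    if denom <= eps:
        return None

    t = (b * e - c * d) / denom  # parameter along the left-eye line
    s = (a * e - b * d) / denom  # parameter along the right-eye line

    on_left = tuple(p + t * v for p, v in zip(left_eye, left_dir))
    on_right = tuple(p + s * v for p, v in zip(right_eye, right_dir))
    return tuple((l + r) / 2 for l, r in zip(on_left, on_right))


if __name__ == "__main__":
    # Assumed set-up: eyes 64 mm apart, both aimed at a point 10 cm ahead.
    left, right = (-0.032, 0.0, 0.0), (0.032, 0.0, 0.0)
    print(gaze_point_3d(left, (0.032, 0.0, 0.1), right, (-0.032, 0.0, 0.1)))
```

The same function handles a target 10 centimetres away and one 100 metres away. In practice the far case is much more sensitive to measurement noise, because the two gaze directions become almost parallel.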

There are huge opportunities in gaming, of course. Ganzin even developed its own game demo, The Eyes Of Medusa, in which the user turns enemies into stone with the power of her petrifying gaze.

“It’s very easy to use as well. It’s quite natural, since you’re using your gaze all the time. When you want to pick up a cup, for example, your gaze goes to the cup first before you pick it up,” says Lin.

Eye tracking software: Sol glasses

The company has also developed its own glasses, called Sol, which are accompanied by a development kit for commercial applications. As with any input technology, while users are employing it for control, they are also producing data. Eye-tracking technology can reveal a huge amount about the user. What they are interested in can perhaps be determined before the person themselves is even aware of it. Pupil size can reveal arousal or interest. Drivers or workers wearing AR glasses incorporating eye tracking could be notified when fatigue is setting in or when concentration is slipping.

“They say the eyes are the window of the soul – I think that is quite true. Through someone’s eyes, we can know a lot about people,” explains Lin.

VISIONARY SPECTACLE: Ganzin Technology has produced an eye-tracking glasses system called Sol

Eyeware

Eyeware, headquartered in Martigny, Switzerland, is another eye-tracking software start-up. It launched in 2016, also as a research spin-off, in this case from the AI specialist Idiap Research Institute and Lausanne-based university EPFL.

The company has been developing eye-tracking solutions for a variety of AI-driven applications, from laptops and smartphones to cars and simulators, and for potential use in retail. Using eye tracking in flight simulators and for pilot training is another space being watched closely.

“The original idea was to try to democratise eye tracking on consumer-grade devices,” says Eyeware account manager Chase Anderson. “The historical problem with eye tracking in all domains is that it’s been quite expensive and finicky. We wondered, can we do this with everyday, consumer-grade 3D cameras? And what new opportunities would that open up for multiple industries?”

Outside of industrial deployment, one of the most consistent uses of Eyeware technology is in research.

“One project was measuring attention based on who was speaking. If you had men and women sitting around a table, how much attention goes to each of them. Does an older man speaking get more attention versus a younger woman? What affects that? It’s for anyone who wants to study attention and attention behaviour.”

The company has recently ventured into B2C applications, with eye-tracking solutions tailored specifically for gamers. Q2 of this year will see a public beta launch of their new product Eyeware Beam, an iPhone app for the gaming market.

“We’re currently in private beta and focused on two main functionalities,” explains Eyeware product marketing manager Sabrina Herlo. “One is using your iPhone as a head-tracking device that will enable you to move your point of view, tracking your head position, for example in Microsoft Flight Simulator. The other is a basic functionality, a first for being enabled with an iPhone, of making the bubble of a streamer’s eye gaze visible onscreen and shareable with their audience.”

Esports

Applications for esports are also being investigated, a field where eye tracking is already being employed for coaching and analysing gameplay.

The Eyeware software hinges on the capture of the head pose and eye features in a 3D environment. These depth images can be captured using inexpensive 3D cameras, or even an iPhone. The technology is camera agnostic. Once the image data of a user’s gaze and head pose is captured, Eyeware algorithms can determine with confidence how and where they are focusing.
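The article does not describe Eyeware’s algorithms, but the final geometric step of any such pipeline can be sketched generically. The example below assumes that head-pose and eye-feature analysis has already produced an eye position and a gaze direction in camera coordinates, and that the screen is a flat plane. The function name `gaze_on_plane` and all its parameters are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def gaze_on_plane(
    eye_pos: Vec3,
    gaze_dir: Vec3,
    plane_point: Vec3,
    plane_normal: Vec3,
    eps: float = 1e-9,
) -> Optional[Vec3]:
    """Intersect a gaze ray with a flat surface such as a screen.

    Returns the 3D hit point, or None if the gaze is parallel to the
    surface or the surface lies behind the viewer.
    """
    denom = _dot(gaze_dir, plane_normal)
    if abs(denom) < eps:
        return None  # looking along the surface, never hits it

    to_plane = tuple(p - e for p, e in zip(plane_point, eye_pos))
    t = _dot(to_plane, plane_normal) / denom
    if t < 0:
        return None  # the surface is behind the direction of gaze

    return tuple(e + t * d for e, d in zip(eye_pos, gaze_dir))


if __name__ == "__main__":
    # Assumed set-up: screen is the plane z = 0, viewer sits 60 cm away
    # and looks slightly to the right of centre.
    hit = gaze_on_plane((0.0, 0.0, 0.6), (0.1, 0.0, -1.0),
                        (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
    print(hit)
```

Because the calculation needs only a position and a direction, it does not care which camera produced them, which is one way to read the claim that the technology is camera agnostic.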

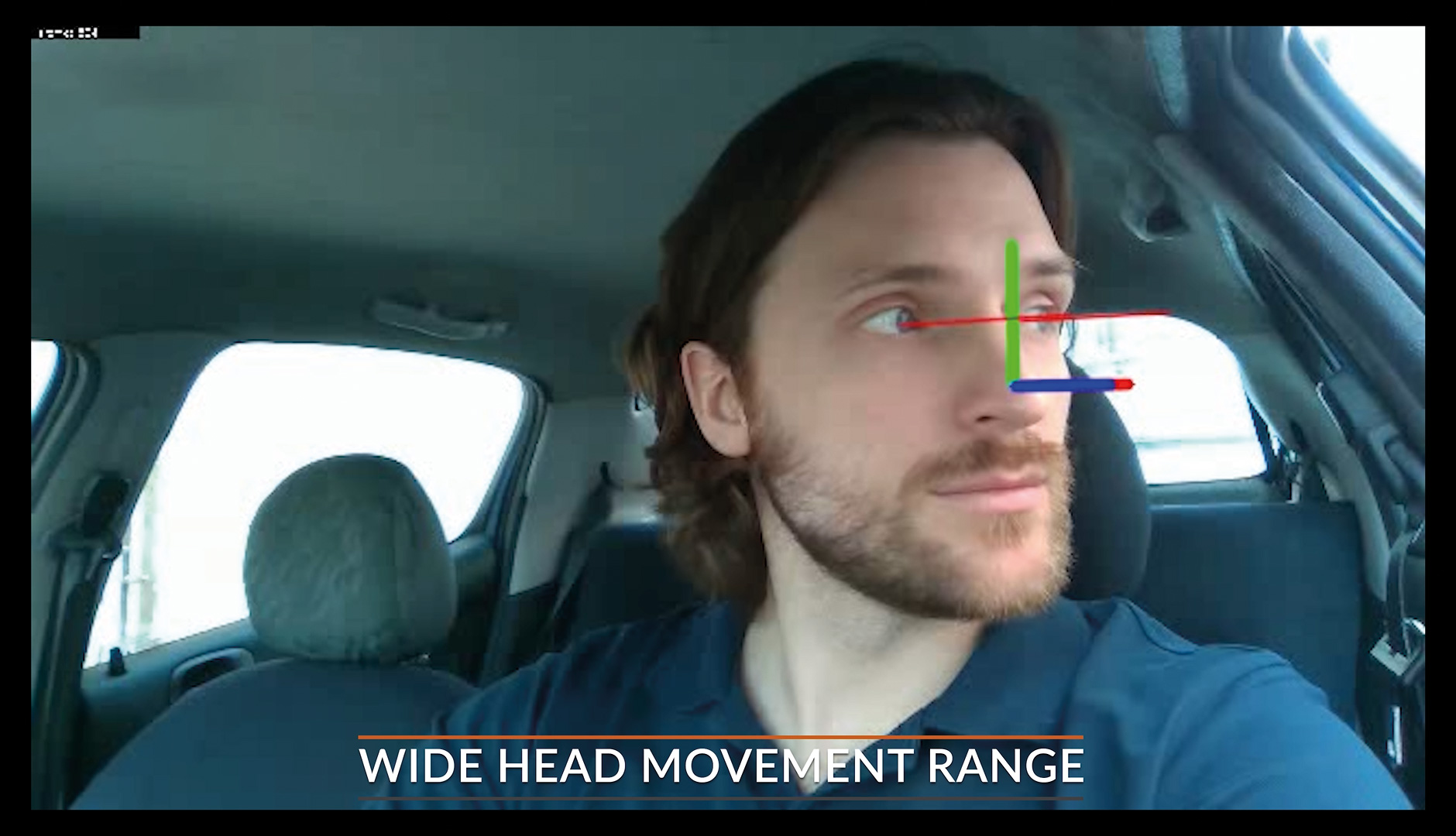

DRIVING FORCE: Eyeware uses 3D modelling and AI to create eye-tracking applications for everything from in-vehicle control to games

The data available in eye tracking is potentially very valuable. And, as Anderson explains, the technology could become an integral part of customer sales.

“There are multiple steps in a retail sales conversion process. You can tell what product the customer walked out with by looking at sales numbers, but there’s a lot of granularity along that decision-making path. Eye tracking can give you insight into what is most attractive to people – are they just glancing at an item, or are they really considering for a few seconds before they move on?”
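The distinction Anderson draws between a glance and real consideration comes down to dwell time. As a rough illustration only, the sketch below assumes gaze samples have already been mapped to product IDs upstream. The sample format, the function names and the two-second threshold are all assumptions, not anything Eyeware has published.

```python
from typing import Dict, List, Optional, Tuple

# One gaze sample: (timestamp in seconds, ID of the item being looked at,
# or None when the gaze is not on any item). Format is hypothetical.
Sample = Tuple[float, Optional[str]]


def dwell_times(samples: List[Sample]) -> Dict[str, float]:
    """Total time spent looking at each item.

    Each interval between consecutive samples is credited to the item
    seen at the start of that interval. Samples must be sorted by time.
    """
    totals: Dict[str, float] = {}
    for (t0, item), (t1, _) in zip(samples, samples[1:]):
        if item is not None:
            totals[item] = totals.get(item, 0.0) + (t1 - t0)
    return totals


def classify_attention(
    samples: List[Sample], consider_after: float = 2.0
) -> Dict[str, str]:
    """Label each item 'considered' or 'glanced' by total dwell time.

    consider_after is an assumed threshold in seconds; a real study
    would choose it empirically.
    """
    return {
        item: "considered" if seconds >= consider_after else "glanced"
        for item, seconds in dwell_times(samples).items()
    }


if __name__ == "__main__":
    shelf_visit = [
        (0.0, "cereal"), (0.5, "cereal"), (1.0, "coffee"),
        (1.2, None), (2.0, "cereal"), (4.0, None),
    ]
    print(classify_attention(shelf_visit))
```

Summing total dwell is the simplest choice. A stricter variant could require a single uninterrupted look of a few seconds before counting an item as considered.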

Combining powerful AI with data that is anonymised at the sensor level and conforms with GDPR rules has the potential to put a lot of power into the hands of brands and retailers. The amount of useful data that can be harvested by recording looks will make mouse and click data look weak in comparison.

“This type of technology is still very young in the market,” adds Herlo. “I think everyone is trying to figure out what could be done next with it.”

Eye tracking software for disability

For some, the benefits of eye-tracking tech aren’t just game-changing, they’re life-changing. UK charity SpecialEffect, launched by Dr Mick Donegan in 2007, works to give people with physical disabilities access to video games. The charity serves enthusiasts of all ages, including accident victims, injured veterans, stroke patients and people with congenital or progressive conditions.

Donegan began as a teacher and assistive technology specialist helping significantly disabled young people in schools. He was investigating the potential for eye-tracking technology as early as 2004 when working with COGAIN, a European organisation studying gaze-controlled solutions for people with complex disabilities. He started SpecialEffect as a way of meeting the desire expressed by clients to access and play video games.

SpecialEffect offers a variety of services and technological interventions, and eye-tracking technology has been a boon for some. One especially useful tool for gamers has been the low-cost Tobii 4C – used by the pros – for tracking eye movement and analysing play. But tech interventions can also include everything from remapping existing set-ups to head-controlled input devices or customised controllers.

“Eye-gaze technology isn’t the only method for game and interface control,” says SpecialEffect communications officer Mark Saville. “It’s often part of a cluster of tools, used in conjunction with voice or body movement. There is no one-size-fits-all set-up.”

Saville gives the example of one SpecialEffect client using eye-gaze control whose eyes could only move vertically. Each gaming set-up needs to be tailored to the individual user’s requirements. That in-home consultation and calibration is a big part of what SpecialEffect does.

“The first time I saw eye-gaze technology was in the early 2000s,” remembers Saville. “With those set-ups you had to keep your head perfectly still. Now, we have users with, say, cerebral palsy, who may have involuntary head movement, but perfect control of their eyes, who can take advantage of the technology.”

Minecraft

The charity recently released version 2 of EyeMine for PCs. It is a gaze-controlled, on-screen interface for everyone’s favourite block-based sandbox game, Minecraft. The free software is optimised for eye-tracking technology, but can also be used with inputs as basic as a computer mouse.

LONG-DISTANCE GOAL: Rob Camm was left tetraplegic after a car crash and began using eye-gaze tech from his hospital bed. He now does wheelchair half marathons and is a major fundraiser for the SpecialEffect charity

As eye-tracking technology improves, so does the empowerment of disabled users, and access to video games can be life-changing. People with severely restricted movement can suddenly run, drive a car, play basketball – and engage with people around the world.

Saville recalls one SpecialEffect client with muscular dystrophy who was finally able to start gaming online – and winning.

“He said of his opponents: ‘You can hear them throwing the controller against the wall when they lose.’”

This article first featured in the Spring 2021 issue of FEED magazine.