FEED goes to SIGGRAPH

We visit one of the best gatherings for innovation around graphics and image creation – and find it’s less a trade show and more a family reunion

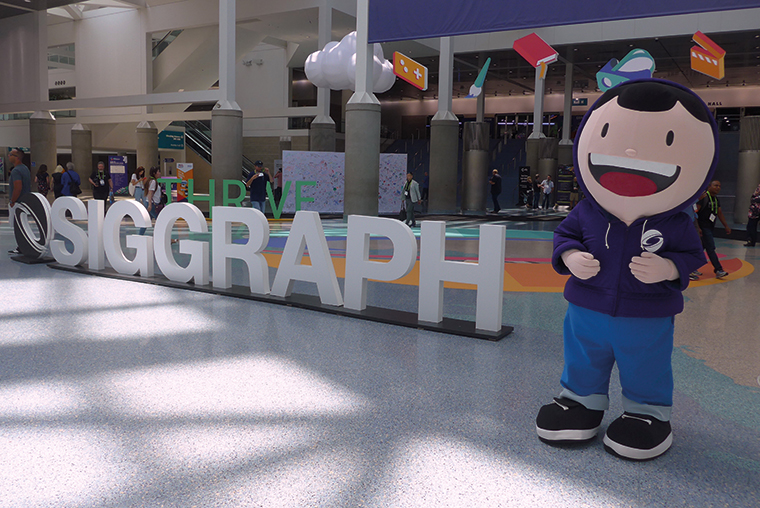

SIGGRAPH is an annual conference celebrating computer graphics as both art and industry. The Los Angeles Convention Center hosted this year’s conference, while additional events and programming took place at the nearby LA Live entertainment complex and JW Marriott hotel.

The event has a great energy, like that very first week at university – lots to see and learn, but also a great sense of community. It is an over-used term, often lacking in sincerity, but SIGGRAPH truly feels like a ‘community’. In truth, it is a collection of interconnected communities – CG artists, industry professionals, researchers, educators, scholars and students – which feed on and nourish one another, and together they thrive. And appropriately, ‘Thrive together’ was the theme of this year’s conference.

Mikki Rose, this year’s conference chair, said: “There is no question that the fantastic people who are participating at the conference in LA are thriving when it comes to their creativity and talent. There is something unique about learning new methods, techniques and technologies among your peers (rather than sitting alone in a cubicle). The relationships our attendees build at the conference are akin to family, which is why I want SIGGRAPH 2019 to embrace that community.”

In a single day, an attendee can sit in on technical papers covering Deep Reflectance Fields – High-Quality Facial Reflectance Field Inference From Color Gradient Illumination, or Finding Hexahedrizations for Small Quadrangulations of the Sphere, a three-hour course asking the question Are We Done With Ray Tracing? (not yet), an eight-hour workshop on Computer Graphics for Autonomous Vehicles, or a panel on The Ethical and Privacy Implications of Mixed Reality, along with exhibitor sessions, business symposia and an educators forum. If an attendee gets lost trying to get from one session to another, there are student volunteers nearby to help.

The conference also offers interactivity, and not just in the VR/AR world. Birds of a Feather sessions are round tables organised by attendees on topics of interest today and for the future, such as the session on Sharing Ideas in Teaching 3D Animation, where educators and professionals interacted on the subject of teaching students, from school students to interns learning in a professional setting.

3D graphics, game engines and live events crossed over in a talk about Childish Gambino’s Pharos live concert in New Zealand. For the show, Keith Miller of Weta Digital and Alejandro Crawford of 2n Design, along with their teams, collaborated to produce a 3D-animated projection inside a 160ft-wide temporary inflatable dome. The venue wasn’t built until just before the show date, so in the absence of the actual dome, the team built a real-time 3D VR model of it in which to create the animation. The graphics were synched to the musical performance, but a VJ (maybe ‘3DJ’ would be more accurate?) could manipulate the visuals in Unreal Engine via a MIDI controller, to deal with song lengths and breaks between songs, which could vary from rehearsal to showtime.

The full experience

The SIGGRAPH exhibit hall was split into two areas. The larger held the traditional trade show booths, with hardware and software manufacturers and service providers showing the latest, greatest and fastest the industry has to offer. The smaller area was no less impressive. The VR Theatre, the Experience Pavilion (which also contained the Immersive Pavilion), the Geek Bar and the Posters presentation area had something for everyone.

The VR Theatre was expanded to 50 seats this year and ran in one-hour blocks, featuring four screenings. The two interactive pieces were an animated Doctor Who short from the BBC, and Bonfire from Baobab Studios, where users encounter an alien life form with only their wits and robotic sidekick to guide them. The lean-back attractions were Kaiju Confidential and A Kite’s Tale. In the former, from easyAction and ShadowMachine, viewers could experience a giant monster attack on a Japanese city. The latter, from Disney Animation, was a soaring flight through the clouds following a majestic dragon kite being annoyed by a small puppy kite.

The most low-tech area of this very high-tech event is the posters gallery. Posters submitted by researchers present concise descriptions of research, including methodology, experiments and conclusions.

The Geek Bar provided attendees with a place to get off their feet and relax on their choice of bean bags, couches or bar stools while watching a screen filled with feeds from the ongoing sessions. Inside the Immersive Pavilion, an array of presenters showed off entertaining and educational games, VR experiences, adaptive technologies, emerging technology, 3D printing and a creative studio with art supplies.

As the Pavilion opened each morning, a line of people streamed in for the privilege of being 3D scanned and having a 3D-printed bust made of themselves. Only about ten people a day were lucky and fast enough to make it in time for this experience. In the future, everyone will be turned into their own free swag.

Innovating with 3D printing, David Shorey of Shorey Designs demonstrated how to print three-dimensional objects on fabric. The printing layers fuse through porous fabric, creating 3D objects that are able to move and bend, on a fabric base. Applications in costume design, fashion and cosplay are obvious and intriguing.

Start your engines

As game developers released once-proprietary game engines into the wild, users ran with them in all directions – and not just down the path to making more games. New applications include architecture, engineering, live events and education. The possibilities seem endless for anyone who can utilise visual, virtual and interactive media. The two engines currently getting the most attention from producers are Unreal Engine and Unity.

Unreal Engine began its life in 1998 as the platform for the Epic Games shooter, Unreal. Unity was created by Unity Technologies as a game engine exclusively for Mac OS X. Unreal Engine’s use in traditional linear entertainment is relatively recent. But in 2015, filmmaker Neill Blomkamp created the short film Adam with Unity, after which the company partnered with the filmmaker to create two more episodes.

The biggest selling point for using game engines in film production is their ability to render virtually final-pixel graphics in real time, allowing final creative decisions to be made at any point during production. Once the environments and characters are brought into the engine, scenes can be played out and shot with any camera from any angle, and lighting can be changed on the fly. Traditional storyboarding gives way to 3D previsualisation. Since the previs is essentially happening on set, creative decisions usually made during production can now be made during what was a preproduction process. Editing, FX and compositing occur at the same time as the previs, so producers now have all stages of production happening simultaneously in-engine.

Fix it in prep

At SIGGRAPH’s Unreal Engine Users Group, Epic Games’ executives, partners and users praised the Unreal Engine as a production tool.

Sam Nicholson, CEO and founder of Stargate Studios, explained: “A lot of shows we do are experimenting with how to move these things into principal photography. What can you do, and what can’t you do, by taking the power of an entire post-production visual effects facility and moving it on set? It requires a mindset change, because we’re saying ‘fix it in prep’ rather than, ‘fix it in post.’”

Producing in the virtual space saves time, and therefore money, but it also brings together departments that have, traditionally, never worked together. Director and producer Jon Favreau used Unreal Engine for the remake of The Lion King and is using it on The Mandalorian.

At the Unreal Users Group gathering, Favreau said: “We get to make the movie essentially in VR, send those dailies to the editor. We have a cut of the film that serves the purpose that previs would have. You’re using it in The Lion King in lieu of layout, and in the case of The Mandalorian or other live-action productions, in lieu of previs, but it allows for collaboration.”

Epic also announced that Unreal Engine 4.23 is available now in preview.

Adaptation and access

A new programme this year was a focus on adaptive technology. The purpose of this programme was to “highlight content throughout our conference that helps people in their everyday lives, particularly those with alternate ability levels,” Mikki Rose explained. “Throughout the years, we’ve seen research in the realm of computer graphics and interactive techniques strike a chord in this area, so this year we actively sought it!”

SIGGRAPH not only has a trade show with the latest innovations, but also a VR Theatre and Experience Pavilion

Conference sessions, immersive demonstrations and exhibitions all focused on adaptive technologies, and the Immersive Pavilion had a few interesting examples. The first was Autism XR, a mobile, web-based, augmented reality ‘game’, for lack of a better word, which aimed to help 14- to 18-year-olds with autism improve their social communication skills. The project was built by high school students in 3D animation and game design at Kent Career Tech Center in Grand Rapids, Michigan. Users interact with a friendly 3D-animated character to learn greeting skills. The project utilises facial recognition, spatial detection, voice recognition, eye-contact monitoring, and expression and emotional recognition to determine a user’s level of interaction. Autism XR is currently only available on Android devices, with plans to branch out to more mobile platforms.

Being Henry is an immersive VR documentary wherein the user experiences life through the eyes of Henry Evans, who has been paralysed by a stroke. Henry can no longer speak and has very limited movement in his neck and one hand. He is able to connect to the world with technology, which allows him to speak, and via interactive robots, which go out into the world as his eyes and ears.

3D-printed bust available at SIGGRAPH

Unity community

Moving out into the rest of the exhibit hall, you see that this is one place where size still matters, at least when it comes to booths. Unreal Engine and Unity seemed to be the two big dogs at the show, though based on market cap, Microsoft and Amazon Web Services are probably the biggest dogs in the exhibit hall. Unreal Engine and Unity are leading the pack in the convergence of traditional, linear storytelling, such as movies and television, with the technology of game development. The same tools used to build games for mobile phones, consoles and computers are also being used to make television series and feature films.

Someone experiencing VR in the Immersive Pavilion

Director Jon Favreau used Unreal Engine to produce Disney’s remake of The Lion King. At the Unreal Engine Users Group event at the Orpheum Theatre, Favreau talked about the collaborative opportunities of his experience. “We built our sets, uploaded them to VR and scouted around right in VR. We had this iterative process where we’d go to the location – just like you would on a live-action shoot – and discuss camera angles, pick lenses. Andy [Jones] did the animation. So we pre-animated everything and we shot it as though we were looking at the lions.”

Disney Studios utilised the Unity engine for a set of three shorts titled Baymax Dreams, and French animation studio Miam! used Unity to create 52 11-minute episodes of the show Edmond et Lucy.

Sci-fi film director Neill Blomkamp completely embraces Unity as a production tool. “I love the idea of environments you can explore at 60fps”, he says. “Real-time simulation, real-time lighting. Unity is what real-time virtual cinema is.”

Down the way from the Unreal booth, hardware and software company Blackmagic Design was showing off DaVinci Resolve 16. This all-in-one suite of post-production tools recently added a full version of the company’s Fusion VFX software. The DaVinci Neural Engine adds features like Speed Warp, facial recognition and automatic colour balancing and colour matching. Facial recognition via the Neural Engine can automate the organisation of clips into bins based on the faces it sees in each clip. The final version of the software should be out this year.

However, this hardware and software won’t produce great results without characters and performances that audiences enjoy. Capture hardware and software were the most ubiquitous tech on the show floor. Performance-capture hardware, facial-motion capture cameras and software, mobile mocap studios, lidar, and photogrammetry hardware and software for capturing environments filled the exhibit hall.

SIGGRAPH is an opportunity like no other, and hard to summarise. A collection of disparate talents and technologies creates a community convening under one roof. There is far more to see, do and learn than any one person can take in. Coming together in the SIGGRAPH community, whether artist or architect, student or studio executive, researcher or just plain searcher, everyone can find an area of interest to engage them, or an area of ignorance they can learn about.

SIGGRAPH Asia 2019 will be held in Brisbane, Australia in November. SIGGRAPH 2020 will convene, for the first time, in Washington, DC in September next year.

This article originally appeared in the September 2019 issue of FEED magazine