How mixed reality can create immersive esports content

Posted on Mar 25, 2020 by Alex Fice

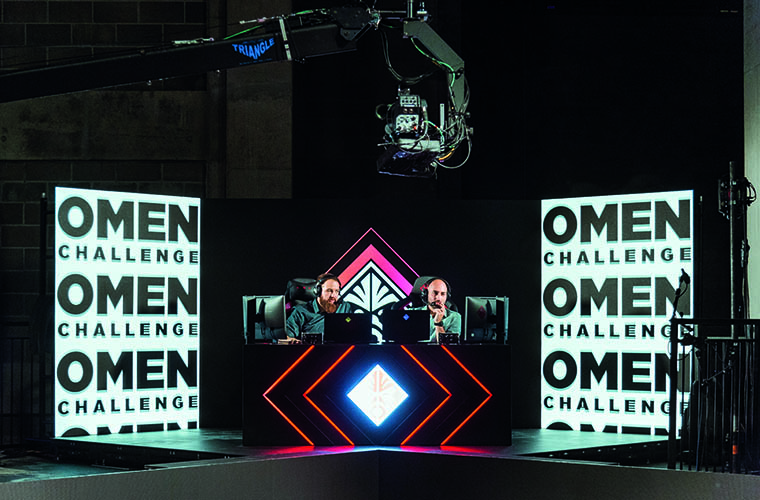

New mixed reality technologies turned the HP Omen Challenge 2019 into the ultimate immersive experience for CS:GO fans

There’s always innovation in esports. It challenges expectations of what competitive sports can and should be. It judges athletes on how good they are instead of how well backed they are and, as an industry, is always evolving and embracing new technology.

Last year’s Omen Challenge by HP saw eight of the world’s best Counter-Strike: Global Offensive (CS:GO) players battle it out for a $50,000 prize. Six were pro gamers, but two were quality amateurs who had earned their spots at the tournament through a series of qualifying rounds. They were trained by coaches in order to compete with their heroes in one-on-one bouts and deathmatches, all fought within a custom-built arena. Extra cash prizes were awarded for bounties such as knife kills and first blood.

The 2019 Omen Challenge was also the first time mixed reality was used in an esports tournament, with real-world players able to enter the game map and interact with their in-game characters.

Program design

Scott Miller, a technical producer working in partnership with Pixel Artworks, was instrumental in bringing the design of the mixed reality program together.

The brief from HP was to “create an immersive experience that would look good to both the live and broadcast audiences,” he explains. “They wanted to do something in-camera with big LED video walls and mixed reality tricks.”

Miller designed a bespoke pipeline toolset using Disguise, Notch and TouchDesigner technologies. Disguise’s super-speedy gx 2c and gx 1 media servers were used to power the real-time mixed reality content that had been made in Notch, with data coming in from the game to power the infographics.

Good omens: Mixed reality was used to allow players to ‘step into’ the game world and replay the highlights

The final output was then run through Disguise’s Extended Reality (xR) workflows and sent to the LED video walls that made up the game arena. The LED video walls cleverly “transported” the shoutcasters into the game world, which could be rendered by the game engine to place them directly into the game map.

“The game engine was controlled by six TouchDesigner machines, written with custom code, to power the image rendering and manage the game data,” Miller notes.

Mixed reality was also used to allow players and interviewers to “step into” the game world and replay the highlights.

Using a Steadicam and rendering the game engine into both the LED and virtual worlds, the player could see themselves in the game and talk about their best moves.

“We worked closely with the game map designers to develop a pipeline that would make the real-world set, game data and content align with the digital equivalent. The outcome was a virtual arena that, to live and broadcast audiences, would seem as though a real person was in the game world,” Miller explains.

Viewers tuning in to the Twitch live stream from home were able to see the in-game footage, combined with the live commentary from the CS:GO shoutcasters. Plus, regular studio cutaways meant that fans were also able to see their idols celebrating their kills in the flesh.

All the feeds

Streaming services company Flux Broadcast handled the technical infrastructure for the broadcast, working with Miller and Pixel Artworks to integrate mixed reality into the broadcast infrastructure – even housing the team at its headquarters in the lead-up to the event so their communication and planning were precise.

Ross Mason, the company’s director, explains the brief, which was “to make the most immersive esports tournament ever”.

Overall, Flux Broadcast distributed an incredible 144 feeds throughout the broadcasting structure and to various vendors. Mason divulges that “one of the biggest hurdles of an esports production is the sheer amount of feeds that you have to work with.”

“For the 2019 Omen Challenge, meticulous planning was required and we had to allocate time towards camera rehearsals with the shoutcasters and players both in and out of the mixed-reality environment,” he adds.

The roles of game observers, esports referees and shoutcasters would have been completely alien to broadcasters a couple of years ago. But understanding their specific needs has opened up opportunities for companies like Flux Broadcast, which act as a link between new tech and traditional broadcasting techniques.

“Being based in a virtual world, augmented reality and mixed reality broadcast technology feels like it has an incredibly high potential for esports, especially when used to blur the lines between the virtual world of the game and the physical world of the players.

“Esports is ultimately about the gameplay,” continues Mason. “So every new technique has to work to support that. Old-school programming and scripting can’t be written off, and close attention to the balance of gameplay versus non-gameplay is paramount. It’s just as important to give time to segments that create narrative and provide an emotional element to the tournament as it is to use new technology. I believe we achieved that harmony for the broadcast of the 2019 Omen Challenge.”

The tournament racked up over 100,000 views on Twitch, with highlights from the show gathering tens of thousands of views across multiple platforms, including Reddit, Facebook Live and YouTube. The final, in which s1mple defeated NBK, has been streamed over half a million times.

Coming together: Getting the right balance of new tech and traditional broadcast can make for a great audience experience

Pros of LEDs

“There have been esports tournaments in the past that have used augmented reality to simulate players’ in-game characters running around in a game using a green screen and a headset, but it’s the first time an esports tournament has succeeded in being able to take real-world players and put them into a game without using those things,” Miller says.

No to green: This was the first time an esports tournament put real-world players into a game without a green screen

“Can you imagine if we had done this with a green screen? The players wouldn’t have been able to interact with the replays in the same way; to point to themselves and discuss why they ducked down here or shot that guy over there, for example. Yes, it would have worked for the broadcast audience, but the live audience would have had to stare at a green screen the whole time.”

He adds: “And HP wanted to make this experience work for both the live and broadcast audiences.”

The success of the project has meant that HP is now eager to tour the concept around the world, and Miller, who was key to the design of the whole thing, has been approached by other broadcasters to reproduce the idea. He teases: “Just be sure to tune into the Summer Olympics in Tokyo later this year!”

This article first appeared in the March 2020 issue of FEED magazine.