Game engines: Game, set, action!

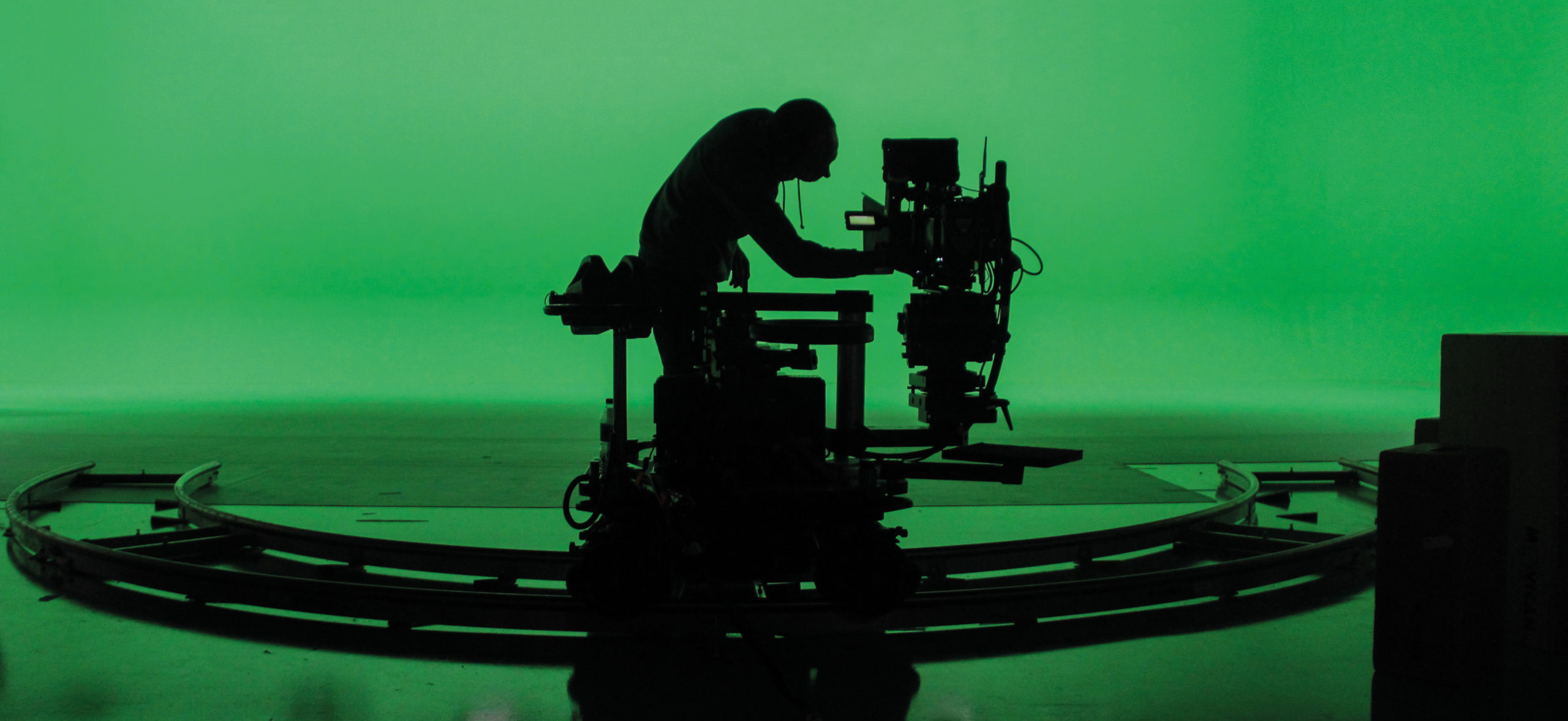

Game engines are bringing entire galaxies down to the size of a green screen studio and a desktop

No longer confined to consoles, game engines now provide the basis for most broadcast virtual set systems and are tearing down barriers to virtual filmmaking and photorealistic mixed reality.

“Game engine technology is one of the driving forces that will make mixed-reality experiences ubiquitous in media production, and eventually in our daily lives in general,” says Marcus Blom Brodersen, CEO of mixed-reality software company The Future Group. “They provide the tools needed to bridge the gap between our digital and physical lives by allowing us to turn enormous amounts of data into beautiful and intuitive images and audio in real time.”

“It’s been interesting to see the blurring of the lines between traditional production and game development,” says John Canning, executive producer of new media and experiential at Digital Domain. “Brands want VFX in new places and on smaller and smaller screens, which means Digital Domain, as a ‘traditional’ VFX company, is getting more requests for real-time solutions. Game engines are an essential piece of this.”

There are many game engines, but two big players will be familiar to the broadcast and entertainment sector. Unity – from Unity Technologies – powers games on mobile, console and desktop PC, as well as AR and VR content. Unreal Engine (UE) from Epic Games is not only behind such games as Fortnite, but has also seen a lot of deployment in virtual studios and augmented reality.

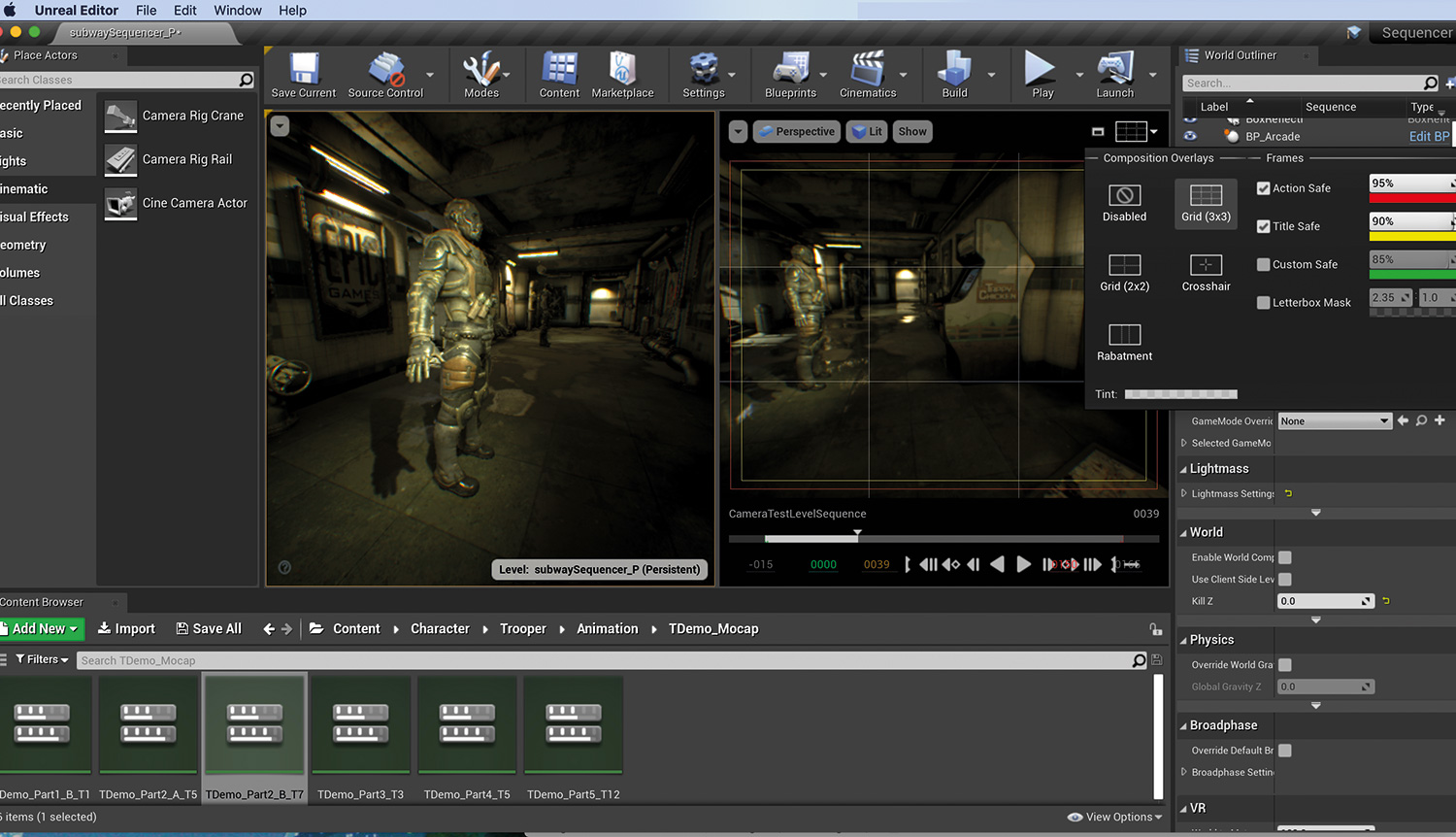

“At Digital Domain, being able to leverage Epic’s UE4 in our production pipeline creates serious efficiencies, as we can apply it to more processes every year,” explains Canning. “From creating virtual scouting tools such as photogrammetry and lidar information of a physical site, to leveraging that content into previs, production (such as LED/in-camera visuals) and, later, post. The game engine is becoming more of a standard for our artists, replacing some traditional VFX tools. So much so, we’ve been able to produce full game cinematics in-engine.”

Some assembly required

However, before you attempt to just fire up UE or Unity and create Avengers: Endgame in your bedroom, think again.

“It’s not like you can bring any old crap in and then Unreal makes it look sexy,” says Dan May, co-owner and VFX art director at Painting Practice, an award-winning design studio that has been using game engines for previs and production planning in series like Black Mirror and His Dark Materials. “You can make assets, but you’re still modelling, rigging and animating all your content in a third-party application like Maya, 3ds Max, Blender or Cinema 4D – you bring all that in, and then you basically shade it and light it.”

The Eurovision Song Contest 2019 used The Future Group’s virtual studio and augmented reality platform, Pixotope, for live broadcast graphics during the competition

“Game engines are, as the name implies, toolboxes to build games. They are not turnkey solutions for creating media productions,” explains Brodersen.

“Game engines themselves are fairly cheap,” adds Canning. “It’s the talent and infrastructure that are expensive. If game engines can become accessible to a point where you don’t need a coder, you’ll see a wider user base. But even then, there will probably be levels to real-time content creators. Right now, a lot of people can buy Maya, but does that mean everyone is doing film-level VFX?”

A virtual galaxy far, far away

Pioneered over a decade ago on films like Avatar and Hugo, virtual production has been revolutionised by bringing game engines into the mix. Dispensing with the sequential pipeline of the past, CG cameras, rendered lighting and set assets can be moved in real time on a virtual stage, and final-frame animations rendered out.

“Moving a column in a palace in Unreal Engine is a drag and drop,” says Painting Practice’s Dan May. “Moving a real column would involve thousands of pounds, and lots of people shouting at you.”

Tools like multi-user editing in Unreal Engine allow potentially dozens of operators to work together on-set during a live shoot. An evolution of this approach was employed on Disney’s The Mandalorian, where UE’s nDisplay multiple-display technology powered the content rendered out on the ‘Volume’ – the name given to the show’s curved-screen virtual set. The LED screens blended with real-time parallax on the real-world sets, set extensions and VFX during production, and even lit the set.

The Disney TV Animation series Baymax Dreams made use of tools in Unity such as its multitrack sequencer Timeline, the Cinemachine suite of smart cameras, Post-Processing Stack to do layout, lighting and compositing, and Unity’s High Definition Render Pipeline (HDRP). These tools are not just being used for previs – the actual rendered frames from Unity are what appear in the final show.

THE BEAUTIFUL GAME Mo-Sys and Epic Games developed an Unreal Engine interface to integrate photorealistic augmented reality into live production with almost no latency. The tracking system can be used on any camera, including ultra-long box lenses for sports

In combination with game engines, consumer items like iPads, mobile phones and VR headsets are all now being used to control interactive content and capture animation data, and also return power to the director. For example, instead of keyframing camera moves, you can perform virtual cinematography in Unreal or Unity from an iPad using the DragonFly app from Glassbox.

Live production

Marcus Blom Brodersen says anyone doing professional media production can get started working with game engines now. “If you are looking to create a virtual set or studio, you will need a green or blue screen and a camera capable of delivering a video signal over SDI,” he explains. “To be able to have a dynamic camera for mixed reality, including zoom and focus shifts, you need a camera-tracking system. These come with different features and price points, depending on the type of production you are doing. In the last few months, several high-end remote PTZ cameras have been launched in the market that deliver both video feed and camera tracking in one package, lowering the threshold for certain types of productions.”
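Matching the virtual camera to the tracked one comes down largely to geometry. As a rough illustration only, and not code from any tracking vendor, the Python sketch below uses the simple pinhole-camera approximation to turn a focal length into the horizontal field of view a virtual camera would need during a zoom. The function name and the sample sensor and focal-length values are assumptions made for the example; production systems typically calibrate for lens distortion as well.

```python
import math


def horizontal_fov_degrees(focal_length_mm, sensor_width_mm):
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    if focal_length_mm <= 0 or sensor_width_mm <= 0:
        raise ValueError("focal length and sensor width must be positive")
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


# Hypothetical zoom from wide to tight on an assumed 36 mm-wide sensor.
for focal_length in (18.0, 36.0, 72.0):
    fov = horizontal_fov_degrees(focal_length, sensor_width_mm=36.0)
    print(f"{focal_length:5.1f} mm -> {fov:5.1f} degrees")
```

As the lens zooms in, the field of view narrows, and the rendered background has to narrow with it on every frame, which is why zoom and focus data need to travel alongside camera position.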

For recorded and live experiences, tracking systems from Mo-Sys (VP plug-ins and StarTracker Studio) and Ncam Technologies (Ncam Reality and Ncam AR Suite) plug straight into the Unreal Engine to drive real-time rendered content.

The powerful Live Link feature in Unreal Engine allows it to consume animation data from external sources. As well as being used to capture facial animation at low cost via phone cameras, this can enable real-time performance in a simulated environment, or the complete replacement of the performer with a digital double.

The Future Group’s own commercial application, Pixotope, is built for on-air use and live events, enabling rapid design and deployment of virtual, augmented or mixed-reality content. It uses Unreal Engine to produce photorealistic rendering in real time, along with the ability to make last-minute changes while in live mode.

“In addition to all the broadcast features that are not part of a game engine: industry-standard SDI video input/output support, genlock/synchronisation, time code support, camera tracking parsing and real-time chroma keyer,” adds Brodersen. “Pixotope also has proprietary technology for solving the compositing of graphics and video in a non-destructive and highly performant manner.”

Computer software company Disguise is also moving into this space, trialling integration with Unreal Engine in its latest systems, setting the stage for immersive live visuals from its legions of users.

The essential component in integrating game engines into live mixed-reality experiences is an effective control system. Experimental support in the UE 4.25 release allows connection from the Unreal Engine to external controllers and devices that use the DMX protocol. With bidirectional communication and interaction, this means creatives will be able to control stage shows and lighting fixtures from Unreal and previs the show in a virtual environment during the design phase.
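For readers unfamiliar with DMX, the data itself is simple: a DMX512 universe carries a start code followed by up to 512 channel levels, each a value from 0 to 255. The Python sketch below only illustrates that layout. It is not Unreal Engine’s DMX interface, and the function name and the fixture’s channel assignments are hypothetical.

```python
DMX_CHANNELS = 512  # channel slots in one DMX512 universe
START_CODE = 0x00   # null start code, used for ordinary level data


def build_dmx_frame(levels):
    """Return the start code followed by 512 channel bytes.

    `levels` maps 1-based channel numbers to values, clamped to 0-255.
    Channels that are not mentioned stay at zero.
    """
    frame = bytearray(1 + DMX_CHANNELS)
    frame[0] = START_CODE
    for channel, value in levels.items():
        if not 1 <= channel <= DMX_CHANNELS:
            raise ValueError(f"channel {channel} is outside 1-{DMX_CHANNELS}")
        frame[channel] = max(0, min(255, int(value)))
    return bytes(frame)


# Hypothetical fixture: dimmer on channel 1, red/green/blue on channels 2-4.
frame = build_dmx_frame({1: 255, 2: 200, 3: 40, 4: 0})
print(len(frame), list(frame[:5]))
```

A lighting desk or a game engine driving fixtures is, at this level, just rewriting those channel values many times a second; the same values can drive virtual fixtures when previsualising a show.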

GAME THEORY Unreal Engine offers a specialised Cinematic Viewport, which gives users the ability to preview cinematics in real time

Gearing up

Many content creation tools are low cost – Blender is free – while sites like Epic Marketplace, KitBash3D and TurboSquid offer a host of free and low-cost models, assets and environments.

“If you were starting out a studio and just wanted to do work, if you’ve got Blender and Unreal, you’ve got an amazing free suite right there,” says Dan May.

After using it during the production of His Dark Materials, Painting Practice released the free Plan V visualisation software. Capable of multi-editor control, it allows users to experiment in real time with different lenses, cameras, animations, lighting and more without extensive knowledge of 3D modelling software.

As well as recent link-ups with Arri, Mo-Sys and Ncam to support commercial virtual productions, On-Set Facilities (OSF) offers StormCloud, a VPN that allows production crews and talent to come together on virtual sets that are located in the cloud. OSF also recently joined the SRT Alliance to employ SRT, the open-source protocol for transporting low-latency timecoded video, to bring video from any location into Unreal Engine.

“We are approaching a time where the interactive and immersive capabilities of the game world will merge with the fidelity of the video/pre-rendered world delivered through thin clients, no matter where we are,” says Canning. “The combination of edge computing, 5G connectivity and real-time engines will make for a powerful and creative ecosystem.” And all this virtual production technology might just become indispensable in the new era of social distancing and remote production.

“With powerful tools and software in the cloud, and an enhanced ability for artists and technicians to collaborate around the world, it’s going to be even easier to create amazing content as time goes on,” concludes Canning.

This article first featured in the September 2020 issue of FEED magazine.
