YouTube community captions removal: Is deaf accessibility lost?

Posted on May 24, 2021 by Neal Romanek

Last summer, YouTube cancelled its community captioning feature. The platform’s community contributions option allowed people posting videos to invite fellow YouTubers to submit video subtitles and closed captions, as well as titles and descriptions. Community contributions also meant that captions and subtitles could be submitted in multiple languages. These could then be reviewed, edited and ultimately published by the channel owner.

YouTube Community Caption cancelling

YouTube still allows users to insert their own captions, use the platform’s AI-powered automatic captioning service, or turn to third-party tools such as 3Play Media, Amara, cielo24 and Rev.

YouTube explained the removal of community captioning with: “This feature was rarely used and had problems with spam/abuse so we’re removing them to focus on other creator tools.”

But deaf and hearing-impaired creators were nonplussed, given how important access to subtitles is to the way they create and consume content. Hard-of-hearing Twitter user @_CodeNameT1M said: “Wait, are you f*cking for real now? It’s unbelievable, most of the Videos I watch have subtitles which were community driven. This is not okay…”

Subtitles accessibility: The ins and outs on a global scale

Closed captioning or subtitling for video is mandated by law in some countries for traditional broadcast (practically speaking, captioning and subtitling mean virtually the same thing – although, technically, captioning is aimed at viewers who can’t hear the audio, and so may include indication of sound effects and music, too). The importance of access to subtitles in these countries has clearly been recognised.

In the US, the Federal Communications Commission [FCC] has long maintained regulations requiring US broadcasters, producers and distributors to provide closed captioning for deaf or hard-of-hearing viewers, and these now apply to most US online platforms. Video material produced by anyone receiving government funding must be accompanied by closed captioning. UK captioning laws were updated to include video-on-demand content in 2017.

Backlash

There was a flurry of backlash against the YouTube community captioning shutdown from hearing-disability advocates and YouTubers, who see it as an important tool in a digital world that is uneven in terms of accessibility.

The “community” side of YouTube’s “community contributions” had become just as valuable to creators and to viewers – the crowd-sourcing aspect allowed for genuine collaboration on content. This was particularly the case in multi-language subtitling, with bilingual users subtitling content from their favourite influencers and bands (K-pop fans feature prominently), as well as educational content. Captioning was supplied by fans as a channel’s following grew.

VISUAL AID: Online video is an ever-growing content industry, but those with hearing difficulties are not always catered for

With the YouTube Community Captions removal, subtitling is now the responsibility of the YouTube publisher, and with AI taking over the captioning space, a lot of this is easily done. But will it be done? Will creators, companies, organisations and institutions, who were happy to toggle on the option for community captions, happily take the extra time to create their own? Some YouTubers are on such frantic schedules, even copying and pasting AI-generated text might be a workflow step too far.

The bigger issue this YouTube change points to is how hard-of-hearing viewers are subject to the whims of tech companies and content makers. It’s perfectly accurate for YouTube to note that the number of users of the community caption service was very small. The platform received a letter asking it to keep the service running, signed by 500,000 people – that’s about 0.03% of YouTube’s roughly two billion users.

In an interview for the BBC (https://www.bbc.co.uk/news/newsbeat-54074573), mildly deaf journalist and campaigner Liam O’Dell responded to the YouTube changes and assessed the captioning capabilities of social media platforms generally: “If you’re talking about the big players like Facebook, Twitter, Instagram and TikTok, I’d say they’re still pretty dire. I’ve tried to caption a video on Facebook. It is a nightmare.”

Online video has been called a universal language, but unless captioning is made fully and easily available to creators and audiences, that promise will remain unfulfilled.

Clean captions

Though broadcasters might be meeting their captioning requirements legally, they don’t always excel in execution. Anyone who has looked at captioning of live events or news will remember instances of hilarious – or irritating – miscaptioning. The fact that this hasn’t improved hugely in recent years suggests to viewers that getting captioning right may not be the highest priority, even among major content providers.

Some new legislation mandates that captioning meet a minimum quality standard. An AI-created word salad, only loosely in sync with the picture, may not be passable any more.

“Since about 2005, the US upped its game for captioning,” explains Russell Wise, sales and marketing SVP at US captioning and subtitling tech provider Digital Nirvana, whose services include captioning, transcription and language localisation. “For the most part, it’s been ‘best effort’ in terms of quality, but there’s no real QA on that, for the most part. In the US, if the captioning is not there, you get a big fine; if it is there, you’re good to go.

“But every country has its own regulations, and it’s starting to evolve again. We’re seeing regulations in Canada that are very specific about the quality – and it’s audited. We’ve also seen that in India. In these cases, the onus is on the content provider to prove that the captioning is high quality and meeting government standards.”

Digital Nirvana is just one company offering AI-enhanced solutions for helping broadcasters assess the quality of their captioning in real time. The need for both speed and quality in captioning is an additional pressure for broadcasters, especially since captioning takes place at the very end of the workflow. It can be the last aspect that still needs to be added – and executed well – while the content is getting pushed out the door.

One of the basic methods of captioning is speech-to-transcript conversion – often with the automated help of AI – after which the transcript is converted into captions.
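As a rough illustration of that second step, here is a minimal Python sketch that turns a timed transcript into captions in the widely used SubRip (.srt) text format. It is not any vendor's method: the input shape (a list of word, start and end tuples), the function names and the 32-character cue limit are all assumptions made for the example.

```python
from typing import List, Tuple

# Hypothetical transcript shape: (text, start_seconds, end_seconds) per word.
Word = Tuple[str, float, float]


def format_timestamp(seconds: float) -> str:
    """Render a time in seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def words_to_srt(words: List[Word], max_chars: int = 32) -> str:
    """Group timed words into cues of at most max_chars and emit SRT text."""
    cues: List[List[Word]] = []
    current: List[Word] = []
    for word in words:
        candidate = " ".join(w[0] for w in current + [word])
        # Start a new cue when adding this word would overflow the line.
        if current and len(candidate) > max_chars:
            cues.append(current)
            current = []
        current.append(word)
    if current:
        cues.append(current)

    blocks = []
    for index, cue in enumerate(cues, start=1):
        start = format_timestamp(cue[0][1])   # start of the first word
        end = format_timestamp(cue[-1][2])    # end of the last word
        text = " ".join(w[0] for w in cue)
        blocks.append(f"{index}\n{start} --> {end}\n{text}")
    return "\n\n".join(blocks) + "\n"
```

Real captioning tools also handle line breaks within a cue, reading speed and speaker changes, which this sketch leaves out.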

“But how do you get the quality?” asks Wise. “Speech to text is only so good, about 90 or 95% correct. So, we either use human intervention to correct that, or give customers a workflow that they can look at confidence scoring on the words and do things like autocorrection.”
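To make the idea of confidence scoring concrete, the Python sketch below flags words that a speech-to-text engine was unsure about, so a human reviewer can check only those. It is an illustration rather than Digital Nirvana's workflow: the input shape (word and confidence pairs), the function names and the 0.9 threshold are assumptions.

```python
from typing import List, Tuple

# Hypothetical engine output: (text, confidence between 0 and 1) per word.
ScoredWord = Tuple[str, float]


def flag_for_review(words: List[ScoredWord], threshold: float = 0.9) -> List[int]:
    """Return the positions of words whose confidence is below the threshold."""
    return [i for i, (_, conf) in enumerate(words) if conf < threshold]


def mark_transcript(words: List[ScoredWord], threshold: float = 0.9) -> str:
    """Render the transcript with uncertain words wrapped as [word?]."""
    return " ".join(
        f"[{text}?]" if conf < threshold else text for text, conf in words
    )


if __name__ == "__main__":
    sample = [("the", 0.99), ("whether", 0.62), ("today", 0.95)]
    print(flag_for_review(sample))
    print(mark_transcript(sample))
```

Raising the threshold sends more words to the reviewer, and lowering it trades accuracy for speed.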

Captions need to be formatted properly, too, and each platform may have its own peculiar style guide for formatting. Tools like Digital Nirvana’s can help automate the process, incorporating the appropriate style guide into the workflow. This can be an aid in submitting content to Netflix, for example, who are very particular about their captioning quality. Digital Nirvana also has tools for doing QA at the end of the process.

Universal languages

The pandemic has slowed down production radically. Companies have reached into archived content, which has occasionally resulted in unexpectedly rich content offerings – clip-based shows, nostalgia TV and pop-up channels based on specific content types. Some of this might have only received basic captioning, some none at all, so companies are trying to find good tools to let them caption it at scale.

Foreign language subtitling is also an essential part of increasing the potential audience for this new content (many English speakers watched K-Drama in lockdown). Again, easy-to-use tech is vital if these releases are to be done quickly and to the highest quality. It’s probably safe to say that, without an AI boost, a lot of the revived content exchanged globally over the last year would still be waiting for sign-off.

Most videos published or livestreamed on YouTube, Facebook or Twitch will not get this kind of care – at least not for a while. But Netflix-quality captioning for social media is something we should aspire to. With the right mix of technology, workflows and regulation, there’s no reason high-quality captioning shouldn’t be an option on every platform.

Hear this!

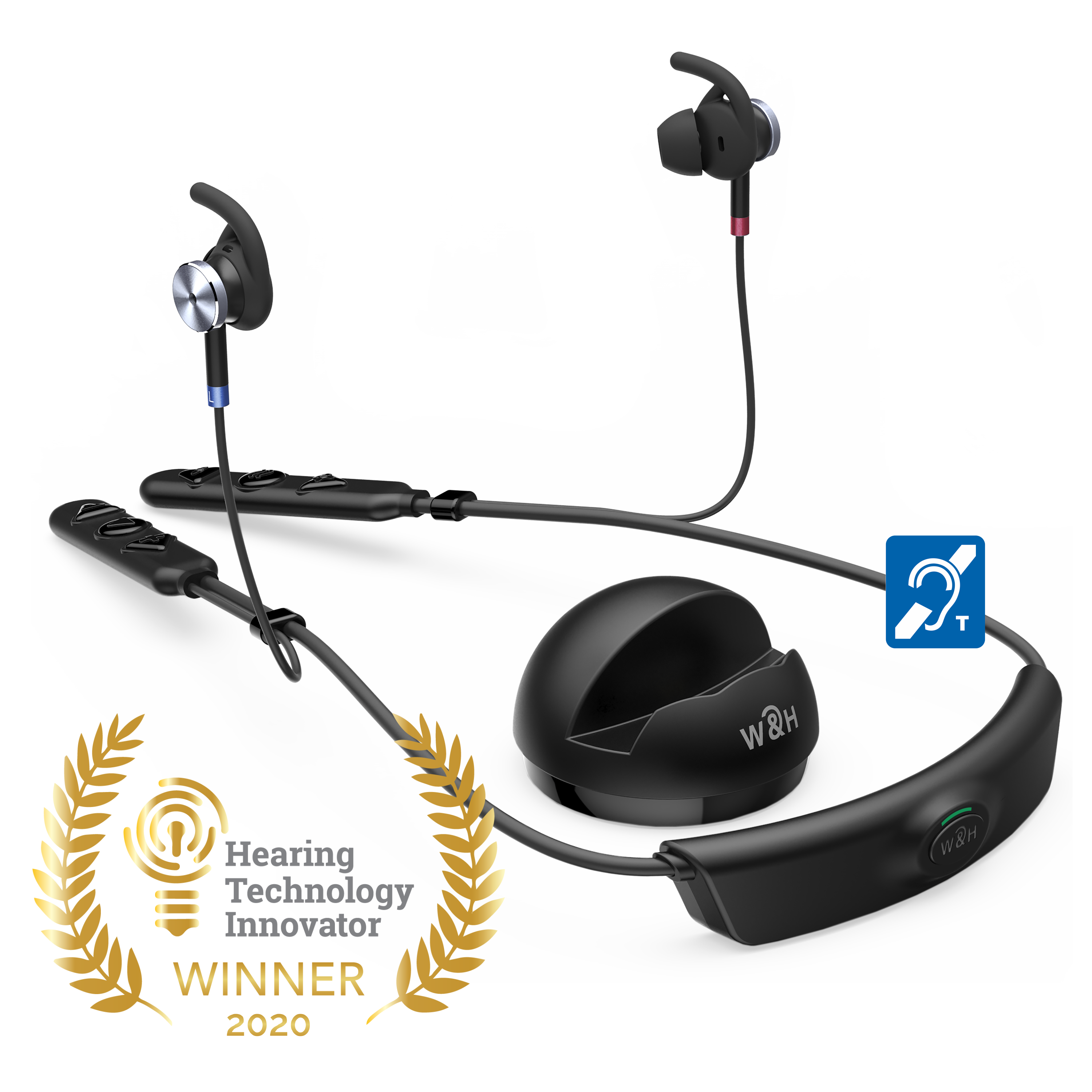

One company is leading the way in improving audio access for the hearing impaired

You really want to hear that YouTube content – the life hack that’s going to change everything. But it was recorded badly, the publisher hasn’t mixed it well, and you just can’t hear exactly what your favourite guru is saying. You turn up your iPhone, but Apple has designed the loudness settings to keep you from shattering your eardrums. Then, of course, there are no video subtitles. So, you watch it at your desktop computer with the BIG SPEAKERS and see how that goes.

It’s really irritating when that happens. But if you’re hard of hearing, this is what a regular day on the internet can be like. Fortunately, there are hearing assistive devices making content more accessible.

Audio communications company Alango Technologies is headquartered in Haifa, Israel, and has turned its focus to technologies improving access for the hearing impaired. The company’s BeHear ACCESS assistive hearing device was an honouree at the 2020 CES Innovation Awards. Its philosophy is that hearing impairment is a more universal phenomenon than most people readily admit, and use of hearing assistive technology should be as common as getting glasses.

Accessing audio isn’t entirely a matter of loudness or frequency enhancement. Sometimes the speed of speech makes it difficult to understand, not only for people with age-related hearing loss, but also for those with cognitive disabilities or language barriers, and even for those who need extra time to write down information as they hear it.

One of Alango’s technologies, Easy Listen, slows down incoming speech in real time.

“People with a hearing disability have difficulties listening to fast talkers and, eventually, at some age, all talkers seem fast,” explains Alango founder and CEO, Dr Alexander Goldin. “We can slow down speech and make it more intelligible. It’s a unique technology you cannot find today, even in expensive hearing aids.” Easy Listen was designed with large buttons and simple controls for older people, who may have dexterity problems.

The company’s Easy Watch technology allows viewers to understand fast talkers on TV, movies and streaming video by dynamically and selectively slowing down both the audio and video streams.

“One problem with hearing loss is that many people are not aware of having it,” says Goldin. “It takes, on average, seven years from the onset of the problem to the point where people become aware. And another three years when they start actually looking for help.”

To shorten this time, Alango has developed a hearing assessment kiosk, which can be put in waiting rooms or pharmacies to assess hearing – then provide customised audio feedback to give people an experience of what they are missing.

“Becoming aware of the problem is not enough to look for help. You need to know what you are missing. Hearing isn’t like vision – I can immediately understand that I can’t read a book or see a traffic sign. But if I don’t hear birds outside, they simply don’t exist for me. At our kiosk, we play video clips and allow people to switch the hearing amplification on and off.”

Finding the right way to compensate for hearing loss isn’t just a matter of increasing loudness. Most gradual hearing loss is selective along specific frequencies. Increasing loudness brings up other frequencies, which may allow a person to fill in the gaps of what they are not hearing, but those missing frequencies are still likely to remain vacant. Alango uses a technology called multi-channel dynamic range processing. This takes into account all parts of the auditory system and tries to make up for the loss using the frequencies a person is still able to hear.

“Hearing loss cannot be compensated for completely. But we’re trying to get as close as possible.”

Alango’s technologies are designed for TVs and other technologies people use every day. The company has begun conversations with tech manufacturers about how best to integrate these new assistive features more widely.

Given the capabilities of most modern devices, we may eventually see smart devices taking into account that the ability to access video and audio is less consistent than we assume. A device with its own built-in hearing assessment – and automatic compensation for any loss it finds – could be just around the corner.

This first featured in the Spring 2021 issue of FEED magazine.
